Impact Factor : 0.548

- NLM ID: 101723284

- OCoLC: 999826537

- LCCN: 2017202541

Eman Ali Elzain Mohamed1* and Elmontasir Taha Mohamed2

Received: January 18, 2023; Published: February 10, 2023

*Corresponding author: Eman Ali Elzain, Health Professions Education, Education Development and Research Centre, Faculty of Medicine, International University of Africa, Sudan

DOI: 10.26717/BJSTR.2023.48.007673

Introduction: A structured viva exam is a process in which one or more examiners put questions to the candidate. More broadly, a viva voce exam is an assessment of student learning that is conducted, solely or in part, by word of mouth. This form of assessment dominated at Oxford and Cambridge until at least the 18th century. The aim of this study was to assess the potential of structured viva voce examinations as a medical school teaching tool for evaluating the performance and knowledge of fourth-year Medical Laboratory students at the International University of Africa, Khartoum, Sudan, in 2021.

Materials and Methods: This was a descriptive cross-sectional study held in the Faculty of Medical Laboratory Science, International University of Africa, Khartoum, Sudan, from February 2021 to July 2021. The participants were fourth-year medical laboratory students who were available at the time of the study. A self-administered questionnaire, designed around the variables and objectives of the study, was distributed by Google Form and on paper. To guarantee data quality, the questionnaire was reviewed by the Department of Medical Education in the faculty.

Results: A total of 47 students took part, 13 male and 34 female. They were distributed across three departments: 19 from Hematology, 16 from Microbiology and 12 from Clinical Chemistry. Most students scored significantly above the pass score (5 for the oral exam and 20 for the MCQs); the mean scores were 7.40/10 (74.0%) and 35.14/40 (87.8%), respectively. The students significantly disagreed that the MCQs exam developed their interpretation skills and that the oral exam was an interesting experiment. They also disagreed with the statement (It was clear to me how this exam would be assessed) for the oral exam. The students significantly agreed with the statement (Completing this experiment has increased my understanding of my specialist) for the oral exam. Most students significantly disagreed that sufficient background information of an appropriate standard was provided in the introduction for the MCQs exam. The students strongly and significantly agreed that the demonstrators offered effective support and guidance and that the oral exam environment was friendly. They significantly disagreed that the experimental procedure of the oral exam was explained at the beginning. The students also reported low anxiety and depression about the questions and an absence of gender or ethnic bias. They strongly agreed that the time given for each question was enough. The mean oral exam scores were 7.3±1.53, 7.4±1.38 and 7.6±1.50 for Microbiology, Hematology and Clinical Chemistry, respectively, and the mean MCQs scores were 33.9±4.04, 35.9±3.58 and 35.6±4.25; the differences between the three departments were statistically insignificant for both exams (p value = 0.882 and 0.316 for oral and MCQs, respectively). The comparison of oral and MCQs scores by gender also showed insignificant differences (p value = 0.679 and 0.803, respectively).

Conclusions: The structured viva exam, or SOE, has an impact on examination scores but is not better than MCQs. If used as a complementary tool of assessment, it provides benefits in assessing or expanding medical students' knowledge but not performance.

Keywords: Structured Viva Voce; Assessment; Medical Laboratory Students

Assessment is one of the most important components of any educational program and, if done well, can improve students’ motivation for learning and provide educators with useful feedback [1]. Learning assessment is often one of the most difficult and time-consuming aspects of education, and it greatly affects students’ mode of studying [2]. Conventionally, assessment motivates individuals to learn and practice the subject and helps to determine whether teaching and learning methods have achieved the learning objectives or outcomes [3]. Many types of assessment can be used in health professions education, such as written tests, performance tests, observational and interpretational assessment, and miscellaneous tests (such as portfolios) [4]. Viva voce, or oral assessment, in its many forms has a long history. It dominated assessment up until at least the 18th century at Oxford and Cambridge and continues to be a principal mode of assessment in many European countries. Elsewhere, and certainly in the UK and Australia, it typically takes the form of an interview or discussion between the examiners and the candidate. Having been used as a method of assessment for centuries, viva voce exams offer the prospect of an interactive conversation in which students can express their knowledge in a variety of ways while asking clarifying questions. Oral exams are, as an alternative, intended to foster unscripted discussion between the evaluator and the student. An individual student (or, sometimes, a group of students) meets face-to-face with the evaluator at their own allotted time, separate from the rest of the class; the exam takes place in an examination hall or other such setting away from patients.

The viva should be distinguished from other types of oral examination, such as the long and short case or the OSCE, which take place in the presence of a patient or are focused on a patient seen by the candidate, and from the oral that is used for the defense of written work such as a thesis. The oral examination is said to assess knowledge, to probe depth of knowledge and to test other qualities such as mental agility [5] (Figure 1). The student(s) are then asked a smaller set of questions, which they answer orally, possibly with the occasional use of paper or a whiteboard. Oral exams are typically graded during or immediately after the exam (“on-the-fly” in some fashion). Importantly, students’ awareness of the conventional structure of questions has replaced question-reading with thinking during the learning process; the approach to question-reading has also drastically reduced welcoming class sessions, which are an environment for communicating thoughts, analyzing data, and even teaching research [6]. Oral exams have been used not as a substitute for, but as a complement to, written exams. They are a way to ask what is not feasible in the written format [7]. On the other hand, oral exams have many benefits, such as probing candidates’ understanding of signs, symptoms and clinical reasoning with regard to a case, exploring topics, and exposing students to interactions [8]. Compared with other assessment tools such as MCQs, the oral exam is difficult and time-consuming, but it assesses problem solving and decision making [9] (Figure 1).

In designing these questions, it should be noted that they must cover the cognitive educational objectives stated in the lesson plan [10,11]. The aim of this study was to assess the potential of oral examinations as a medical school teaching tool for evaluating the performance and knowledge of fourth-year Medical Laboratory students at the International University of Africa, Khartoum, Sudan, in 2021. We also aimed to assess our implementation of oral exams in the undergraduate courses of the final year, and to obtain the students’ evaluation of the medical laboratory exam in Sudan and compare it with the findings of international studies in the medical fields, so that the comparison could supply this missing information to students and teachers alike. In addition, a goal of this study was to determine the usefulness of the oral exam in medical student assessment by evaluating its relationship to student performance on other required block-based examinations (MCQs).

Justification

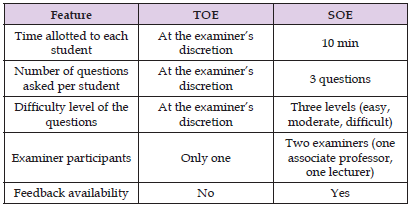

Student learning is driven by assessment; assessment is important to the student’s experience, and the way students’ learning is evaluated affects their mode of studying. We conducted this study to highlight areas of concern regarding the effectiveness and assessment properties of oral exams. Our findings may help avoid duplication of assessments and results in the future, which may reduce unnecessary effort and help the faculty decide whether or not to design other assessment tools that assess the same cognitive skills in a single subject. There is a strong need to propose a reliable and valid solution for oral exams. We also sought to assess the present system of oral examination (viva voce) in our institution and to propose a solution to improve its validity (Table 1).

Table 1: Comparison of the Main Characteristics of Traditional Oral Examination (TOE) and Structured Oral Examination (SOE) [12].

Study Design

A descriptive cross-sectional study.

Study Area

The study was conducted in the Faculty of Medical Laboratory Science at the International University of Africa, Khartoum, Sudan. The university belongs to the Federation of the Universities of the Islamic World. It has a group of faculties covering education and humanities, Shariah and Islamic studies, and applied science, in addition to medicine and engineering.

Study Population

The target population was fourth-year undergraduate students of the Medical Laboratory Faculty of the International University of Africa who attended the final oral exam and responded to the questionnaire. The present cohort (63 students) had studied MLS subjects for 3 years; in the final year, each student takes one specialty (Hematology, Microbiology, Clinical Chemistry, Histopathology or Parasitology). Students must sit the oral exam in their specialty subject as prescribed in the syllabus, and this exam is non-compensatory. The scores of participating students in the first sessional examination (MCQs) were taken from the faculty records.

Sample Size

The sample covered all final-year students who volunteered and attended the oral exam, together with their oral and MCQs exam scores from 2020 to 2021.

Sampling Technique

The oral exam was constructed from the last 2 courses of the final year of the Faculty of Medical Laboratory Sciences, International University of Africa; 50% of the content was blood banking and 50% was blood coagulation. The questions all related to these topics but differed greatly between individual students. We also considered flexibility, consistency and equity in designing the oral exam questions. The cross-sectional study was performed on 47 fourth-year medical laboratory students who had finished the final year, which consists of 2 semesters of 15 weeks each. The sample included volunteers from three departments (Hematology, Microbiology and Clinical Chemistry) between 2020 and 2021. Participation was voluntary, and all participants provided informed consent. Both exams were given to the same sample of students, and the questions were constructed on the same topics. The weighting of the final grade for these students was adjusted because the SOE counted for 10% and the MCQs for 40%. Two examiners (attendings or residents) administered a 10-minute SOE to each student. Each examiner assigned a grade, and the two grades were averaged to give a final SOE score. All student scores from the SOE were collected and student identifiers were removed. The MCQ test was given to examinees within a computer-based learning system. Scores were averaged to assess the potential of the SOE for assessing students’ performance. Statistical analyses were performed using SPSS 23.0. Independent-samples t-tests were performed to compare the mean scores between the SOE and MCQs, and α ≤ 0.05 was considered statistically significant. The final exam consists of two written papers, two practical exams and an oral exam.
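The scoring rule described above (two examiner grades averaged into one SOE score, with the SOE and MCQs counting for 10% and 40% of the final grade) can be sketched as follows. This is only an illustration, not the faculty's actual grading procedure: the function names, the example grades, and the assumption that each weight is applied to the percentage score are ours.

```python
from statistics import mean

SOE_MAX, MCQ_MAX = 10, 40            # maximum marks reported in the study
SOE_WEIGHT, MCQ_WEIGHT = 0.10, 0.40  # share of the final grade per exam


def final_soe_score(examiner_grades):
    """Average the grades given by the examiners (two per student in the study)."""
    return mean(examiner_grades)


def weighted_contribution(score, max_mark, weight):
    """Points (out of 100) that one exam adds to the final grade.

    Assumption: the weight is applied to the percentage score.
    """
    return 100 * weight * score / max_mark


# Hypothetical student (not study data): SOE grades 7 and 8, MCQ score 36/40.
soe = final_soe_score([7, 8])
soe_points = weighted_contribution(soe, SOE_MAX, SOE_WEIGHT)
mcq_points = weighted_contribution(36, MCQ_MAX, MCQ_WEIGHT)
print(soe, soe_points, mcq_points)
```

Under this assumption the raw marks (out of 10 and out of 40) are already on the weighted scale, which is why the two exams can be compared only after conversion to percentages.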

Data Collection Methods

A self-administered questionnaire, designed around the variables and objectives of this study, was distributed by Google Form and on paper. To guarantee data quality, the questionnaire was reviewed by the Department of Medical Education in the faculty.

Data Analysis

Student scores were sorted by oral exam score, cleaned of outlying scores, and summarized in an Excel sheet. The minimum, maximum, mean and standard deviation of the marks obtained were calculated from the percentage scores collected from the data and from student feedback, using IBM SPSS Statistics for Windows, version 23.0 [12].
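The descriptive step can be illustrated with a short sketch. The study used SPSS; the Python below is only an equivalent calculation under our own assumptions, and the `describe` helper and the example marks are hypothetical.

```python
from statistics import mean, stdev


def describe(scores, max_mark):
    """Return min, max, mean and sample SD of scores expressed as percentages."""
    pct = [100 * s / max_mark for s in scores]
    return {"min": min(pct), "max": max(pct), "mean": mean(pct), "sd": stdev(pct)}


# Hypothetical oral-exam marks out of 10 (not the study data).
oral_marks = [5, 7, 9]
summary = describe(oral_marks, max_mark=10)
print(summary)
```

Converting to percentages first puts the oral exam (out of 10) and the MCQs (out of 40) on a common scale before they are summarized.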

Ethical Consideration

Ethical approval was obtained from the research unit of the Educational Development Centre. Written consent was obtained confidentially from all participants in this study. The oral exam questions were constructed by well-trained senior faculty staff to improve reliability and quality standards and to maintain privacy and confidentiality.

A total of 47 students in the clerkship period were included in this study; 13 were male and 34 were female (Table 1). They were distributed across three departments, Hematology, Microbiology and Clinical Chemistry (19, 16 and 12, respectively) (Table 2).

Performance of Medical Laboratory 4th Year Students

The mean, standard deviation and percentage of the oral and MCQs exam scores were 7.40/10 ±1.44 (74.0%) and 35.14/40 ±3.91 (87.8%), respectively (Table 3). Most students scored significantly above the pass score (5 for oral and 20 for MCQs) (p = 0.00) (Table 4).

Note:

• One-sample t-test

• Variables= Oral and MCQs.
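The one-sample t-test named in the note compares a sample mean with a fixed reference value, here the pass score. As a rough check, the t statistic can be recomputed from the summary figures reported in the text (n = 47; oral 7.40 ± 1.44 against a pass score of 5; MCQs 35.14 ± 3.91 against a pass score of 20). The function name is ours, and because the inputs are rounded published values the result is approximate.

```python
from math import sqrt


def one_sample_t(sample_mean, sample_sd, n, reference):
    """t statistic for testing a sample mean against a fixed reference value."""
    standard_error = sample_sd / sqrt(n)
    return (sample_mean - reference) / standard_error


N = 47  # students in the study
t_oral = one_sample_t(7.40, 1.44, N, reference=5)   # oral exam, pass score 5
t_mcq = one_sample_t(35.14, 3.91, N, reference=20)  # MCQs, pass score 20
print(f"oral: t = {t_oral:.2f}, MCQs: t = {t_mcq:.2f}, df = {N - 1}")
```

Both statistics lie far above the two-sided 5% critical value of about 2.01 for 46 degrees of freedom, which is consistent with the reported finding that scores were significantly above the pass mark.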

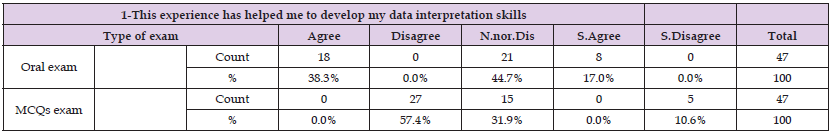

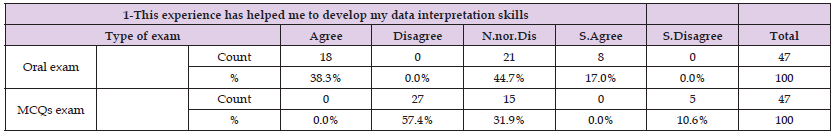

Table 4: Association of Type of Exam with the Question of (This Experience has helped me to Develop my Data Interpretation Skills).

Note: P value 0.000

Student Satisfaction about this Experiment

Table 5: Association of type of exam with the question of (This experience has helped me to develop my data laboratory skills).

Note: P value 0.000

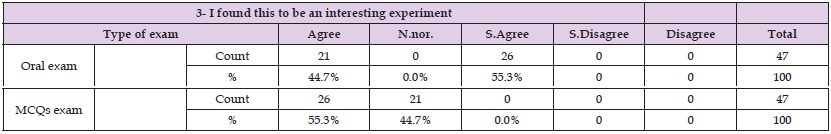

Table 6: Association of type of exam with the question of (I found this to be an interesting experiment).

Note: P value 0.000

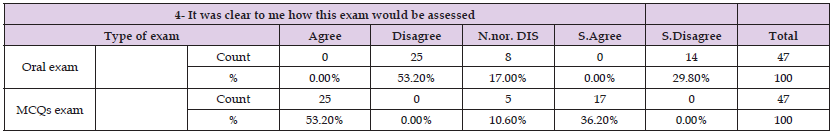

Table 7: Association of Type of Exam with the Question of (It was clear to me how this exam would be assessed).

Note: P value 0.000

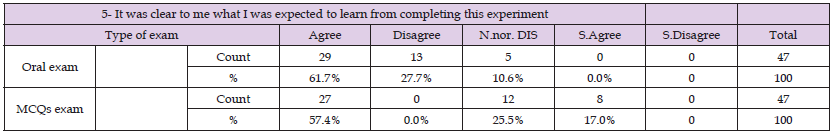

Table 8: Association of Type of Exam with the Question of (It was clear to me what I was expected to learn from completing this experiment).

Note: P value 0.000

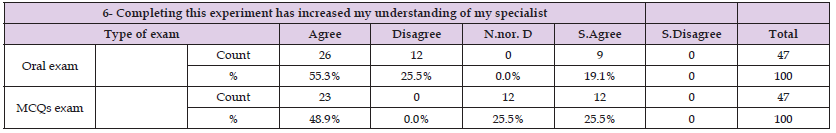

Table 9: Association of Type of Exam with the Question of (Completing this experiment has increased my understanding of my specialist).

Note: P value 0.000

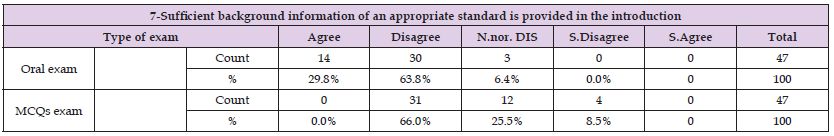

Table 10: Association of Type of Exam with the Question of (Sufficient background information of an appropriate standard is provided in the introduction).

Note: P value 0.000

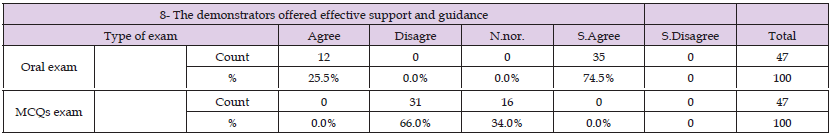

Table 11: Association of Type of Exam with the Question of (The demonstrators offered effective support and guidance).

Note: P value 0.000

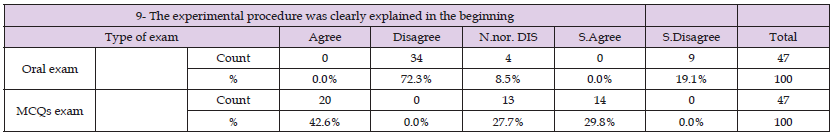

Table 12: Association of Type of Exam with the Question of (The experimental procedure was clearly explained in the beginning).

Note: P value 0.000

The feedback questionnaire on the students’ views of the SOE and MCQs was analyzed by applying the paired t-test. Each response to the questionnaire items was converted to numerical data on a Likert scale, and the mean ± SD was listed. The analysis showed a significant difference in student opinion between the SOE and MCQs. The students significantly disagreed that the MCQs exam experience developed their interpretation skills (p value = 0.00) (Table 5). Most students neither agreed nor disagreed that the oral exam experience developed their laboratory skills (p value = 0.00) (Table 6). The students strongly agreed that the oral exam was an interesting experiment (p value = 0.00) (Table 7). The students significantly disagreed with the statement (It was clear to me how this exam would be assessed) for the oral exam (p value = 0.00) (Table 8). It was clear to the students what they were expected to learn from the oral exam experiment (p value = 0.00) (Table 9). The students significantly agreed with the statement (Completing this experiment has increased my understanding of my specialist) for the oral exam (p value = 0.00) (Table 10). Most students significantly disagreed that sufficient background information of an appropriate standard was provided in the introduction for the MCQs exam (p value = 0.00) (Table 11). The students strongly and significantly agreed that the demonstrators of the oral exam offered effective support and guidance (p value = 0.00) (Table 12).
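The Likert coding mentioned above can be sketched as below. The paper does not list the numeric values assigned to each response, so the 1 to 5 coding, the `likert_summary` helper, and the example responses are all assumptions made for illustration.

```python
from statistics import mean, stdev

# Assumed 5-point coding; the paper does not list the numeric values used.
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neither agree nor disagree": 3,
    "agree": 4,
    "strongly agree": 5,
}


def likert_summary(responses):
    """Convert text responses to numbers and return (mean, sample SD)."""
    values = [LIKERT[r.strip().lower()] for r in responses]
    return mean(values), stdev(values)


# Hypothetical responses to one questionnaire item (not the study data).
item = ["Agree", "Strongly agree", "Agree", "Neither agree nor disagree"]
m, sd = likert_summary(item)
print(f"{m:.2f} ± {sd:.2f}")
```

Once each item is coded this way for both exams, the per-student differences between the oral and MCQs ratings are what a paired t-test would operate on.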

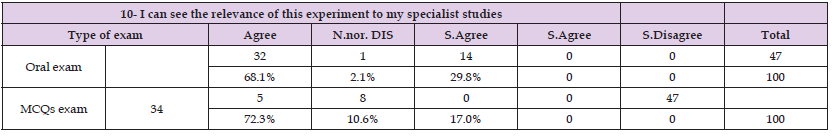

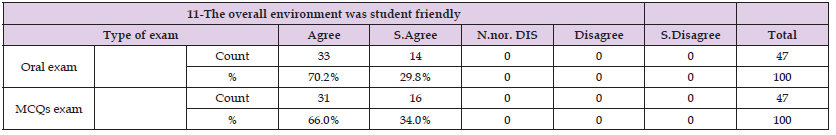

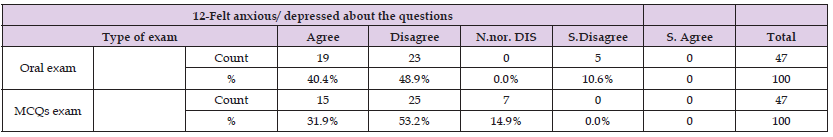

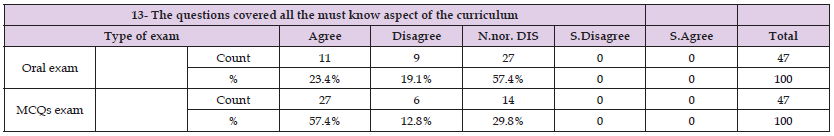

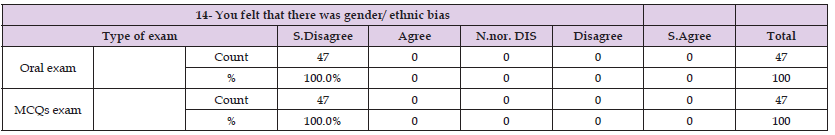

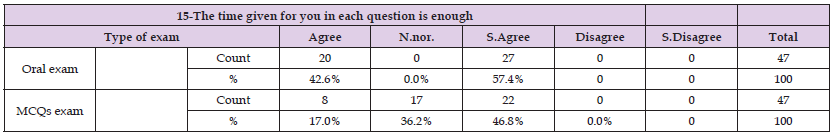

The students significantly disagreed that the experimental procedure of the oral exam was explained at the beginning (p value = 0.00) (Table 13). The students strongly agreed with the statement (I can see the relevance of this experiment to my specialist studies) (p value = 0.00) (Table 14). The students significantly agreed that the environment of the oral exam was friendly (p value = 0.00) (Table 15). Most students significantly disagreed with the statement (Felt anxious/ depressed about the questions) (p value = 0.00) (Table 16). The students neither agreed nor disagreed that the questions covered all aspects of the curriculum (p value = 0.00) (Table 17). All the students strongly disagreed that there was gender or ethnic bias (p value = 0.00) (Table 18). The students strongly agreed that the time given for each question was enough (p value = 0.00) (Table 19).

These findings agree with the study by Wang et al. (2020), whose results showed that students were satisfied with the SOE. Their students reported that the environment was friendly and less confronting during the SOE. The high uniformity of questions across examinees minimized the “carryover effect” in the SOE compared with the TOE. Lower anxiety during the SOE was appealing to students. No students agreed that any gender bias existed during either oral session. More students agreed that the SOE improved their communication skills in medical language. Students felt more confident in conducting the practical and composing the results and discussion of the laboratory report. They appeared to apply the physiology knowledge taught in the classroom in the experimental context. Students stated that they learned more from laboratory sessions and, further, that they would like the SOE introduced to other medical laboratory courses.

Table 13: Association of Type of Exam with the Question of (I can see the relevance of this experiment to my specialist studies).

Note: P value 0.000

Table 14: Association of Type of Exam with the Question of (The overall environment was student friendly).

Note: P value 0.000

Table 15: Association of Type of Exam with the Question of (Felt anxious/ depressed about the questions).

Note: P value 0.000

Table 16: Association of Type of Exam with the Question of (The questions covered all the must know aspect of the curriculum).

Note: P value 0.000

Table 17: Association of Type of Exam with the Question of (You felt that there was gender/ ethnic bias).

Note: P value 0.000

Table 18: Association of Type of Exam with the Question of (The time given for you in each question is enough).

Note: P value 0.000

• Crosstab

• P value less than 0.05 considered significant
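The table notes label the analysis only as a crosstab with a p value. The test most commonly paired with a cross-tabulation is the Pearson chi-square test, but the paper does not name it (and the text elsewhere mentions a paired t-test), so treating it as chi-square here is our assumption. A minimal sketch with hypothetical counts:

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a 2-D table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if exam type and response were independent.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


# Hypothetical counts (not the study data): rows = exam type (oral, MCQs),
# columns = response (agree, disagree).
counts = [[10, 20], [20, 10]]
stat, dof = chi_square(counts)
print(f"chi-square = {stat:.2f}, df = {dof}")
```

For one degree of freedom the 5% critical value is about 3.84, so a statistic above that would be read as a significant association between exam type and response.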

Scores of Orals and MCQs among Different Departments and Gender

The mean oral exam scores across departments were 7.3±1.53, 7.4±1.38 and 7.6±1.50 for Microbiology, Hematology and Clinical Chemistry, respectively, and the mean MCQs scores were 33.9±4.04, 35.9±3.58 and 35.6±4.25. The differences between the three departments were statistically insignificant for both exams (p value = 0.882 and 0.316 for oral and MCQs, respectively) (Table 20). The comparison of oral and MCQs scores by gender also showed insignificant differences (p value = 0.679 and 0.803, respectively) (Table 20) (Figure 2).

Note:

• ANOVA and t-test were used to calculate P values.

• P value less than 0.05 considered significant.

• Mean± Standard deviation.

• Minimum and maximum values are given in brackets.
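The department comparison can be approximately re-derived from the summary statistics given in the text. The sketch below computes a one-way ANOVA F statistic from group sizes, means and standard deviations; the group sizes (Microbiology 16, Hematology 19, Clinical Chemistry 12) are taken from the sample description, the function name is ours, and because the inputs are rounded published values the output will not exactly match the SPSS results.

```python
def anova_from_summary(ns, means, sds):
    """One-way ANOVA F statistic from group sizes, means and sample SDs."""
    total_n = sum(ns)
    grand_mean = sum(n * m for n, m in zip(ns, means)) / total_n
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m in zip(ns, means))
    ss_within = sum((n - 1) * sd ** 2 for n, sd in zip(ns, sds))
    df_between = len(ns) - 1
    df_within = total_n - len(ns)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Order: Microbiology, Hematology, Clinical Chemistry.
ns = [16, 19, 12]
f_oral, df1, df2 = anova_from_summary(ns, [7.3, 7.4, 7.6], [1.53, 1.38, 1.50])
f_mcq, _, _ = anova_from_summary(ns, [33.9, 35.9, 35.6], [4.04, 3.58, 4.25])
print(f"oral: F({df1}, {df2}) = {f_oral:.2f}; MCQs: F = {f_mcq:.2f}")
```

Both F values fall below the 5% critical value of roughly 3.2 for (2, 44) degrees of freedom, in line with the reported non-significant differences between departments.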

The present system of oral examination in our institution can be considered an SOE according to the classification of Wang et al. (2020). From the students’ comments on the several open-ended items in their questionnaire, they found that, overall, students were satisfied with the SOE in theory and laboratory sessions. Students were more comfortable with the SOE and felt it was fair that all students should be asked the same sets of predefined questions [13]. The performance of the fourth-year Medical Laboratory students, as indicated by the oral exam results, showed that most students scored significantly above the pass score; the same was seen in the MCQs exam, indicating that student performance in the two exams was similar (pass scores of 5 for oral and 20 for MCQs) (p = 0.00). This agrees with the study by Wu et al. (2022), although their design differed from ours in having two groups, an SOE group and a non-SOE group. They found no statistically significant advantage to the SOE when comparing the group means on the National Board of Medical Examiners (NBME) subject exam between students who did not participate in the SOE and those who did [14]. In the comparison between the oral exam and the written exam (MCQs) based on student opinion, this study found that students considered the oral exam a good opportunity for developing interpretation skills, while laboratory skills could be developed equally by both exams. On the other hand, the students reported that they knew how the assessment would proceed in the MCQs exam, unlike in the oral exam.

It was clear to the students what they would learn from the oral exam, unlike the MCQs exam. We think the explanation is that the oral exam takes more time than the MCQs, so its questions are fewer and more predictable. For both the oral and MCQs exams, the students significantly disagreed that sufficient background information of an appropriate standard was provided in the introduction. The demonstrators offered more effective support and guidance in the oral exam than in the MCQs exam, which may be due to the nature of the oral exam, which depends on direct conversation between demonstrators and students. Most students significantly disagreed with the statement (Felt anxious/ depressed about the questions) for both exams (p value = 0.00). This agrees with the study by Davis et al. (2005), who asked whether the oral exam is more stress-provoking than other assessments. They found no evidence that orals are more stressful than other exams and, indeed, found anecdotal evidence to the contrary. One personal narrative reported that the short case was more stressful than other parts of the MRCP clinical examination [5]. The students neither agreed nor disagreed that the questions covered all aspects of the curriculum (p value = 0.00). All the students strongly disagreed that there was gender or ethnic bias (p value = 0.00). The students strongly agreed that the time given for each question was enough (p value = 0.00).

The mean and standard deviation of the oral exam scores were 7.3±1.53, 7.4±1.38 and 7.6±1.50 for Microbiology, Hematology and Clinical Chemistry, respectively, and those of the MCQs scores were 33.9±4.04, 35.9±3.58 and 35.6±4.25; the differences between the three departments were statistically insignificant for both exams (p value = 0.882 and 0.316 for oral and MCQs, respectively). The comparison between male and female students also showed insignificant differences for the oral and MCQs exams (p value = 0.679 and 0.803, respectively). Preparing for the orals increased student motivation to learn their specialties. The students were well informed that some questions in the SOE would relate to operational skills, so they paid more attention to previewing the operating procedure. Good preparation for the SOE thus helped them to manage their information. More students felt competent in the laboratory. Students felt more confident in conducting the practical and composing the results and discussion of the laboratory report. They appeared to apply the subject knowledge taught in the classroom in the experimental context. Students stated that they learned more from laboratory sessions and, further, that they would like the SOE introduced to other medical laboratory courses. Another study, done in an Indian setting in pharmacology, showed that students prefer the SOE to the TOE because it has a minimal luck factor and reduced bias. Students reported feeling less anxious and depressed about the SOE because of its standardization and objectivity [15,16].

In conclusion,

1. The SOE, or structured viva exam, has an impact on examination scores but is not better than MCQs. It is better used as a complementary tool of assessment.

2. The performance of students in the oral and MCQs exams was not affected by department or gender.

3. The students were satisfied with the SOE in theory and laboratory sessions, and they were more comfortable with it. They felt it was fair that all students should be asked the same sets of predefined questions.

No funding was received.

All the data used in the study are available from the first and corresponding author on reasonable request.

Ethical approval was obtained from the research ethics committee at IUA.

All authors declare that they have no conflict of interest and that no funding was received.