Abstract

Gait analysis has become established in recent years as an essential tool for quantifying rehabilitation effectiveness. Nevertheless, available commercial systems need a structured environment, are very expensive, require precise calibration and are not portable. Recently, it has been shown that the Kinect can be used for kinematic assessment, and it has been used side by side with commercial systems to exploit the strengths of both and provide a more detailed analysis. The proposed method aims to provide a low‐cost, easy‐to‐use, markerless and portable Kinect‐based system that can perform gait analysis without requiring accurate calibration or a structured environment.

Keywords: Neuroscience; Neurorehabilitation; Motion Tracking; Kinect-Based System

Introduction

Gait analysis has become established in recent years as an essential tool for quantifying rehabilitation effectiveness. Gait assessment has become widespread in clinical practice to evaluate and implement physiotherapy treatments, to distinguish between disease entities and to customise rehabilitation programs [1].

Neurological and neurodegenerative diseases may alter normal gait patterns; for this reason, assessing kinematic and dynamic parameters during walking is crucial in order to detect the impairments underlying reduced motor function [1]. BTS Gaitlab (BTS Bioengineering Corp., Quincy, MA 02169, USA) is a complete system for clinical gait analysis, which integrates kinematic, dynamic and electromyographic (EMG) parameters. With its 12 Vicon infrared cameras, two force plates, two environmental cameras and wireless EMG probes, it can be considered the gold standard for gait assessment. The BTS system uses different protocols to acquire gait parameters and requires up to 22 markers, fixed on the subject’s body by an expert operator according to well‐known marker placement schemes [2].

The Kinect v2 contains three main devices that work together to detect the subject’s motion and to create a representation of the subject on the screen: an RGB colour VGA video camera, a depth sensor, and a multi‐array microphone. Together, these components detect and track 25 different points on each human body. The combination of hardware and software gives the Kinect the ability to generate 3D images and recognize human beings within its field of view [3]. Recent studies [4‐11] showed that the Kinect can be used for kinematic assessment; this work ranges from feasibility studies for clinical use, through attempts to automate step detection, to the combined use of commercial systems and the Kinect to exploit the advantages of both. This paper evaluates the feasibility of a Kinect‐based, markerless and easy‐to‐use system for gait assessment by comparing the accuracy of various gait parameters extracted by the system with those obtained from a traditional gait analysis system. The methodology is validated with emphasis on its advantages, namely its low cost and ease of use.

Experimental Validation

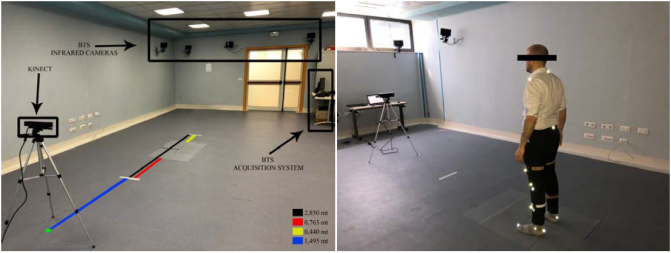

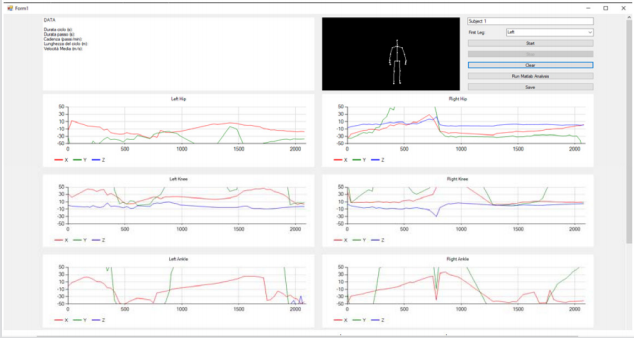

A Microsoft Kinect v2 (sampling frequency = 30 Hz) and 12 BTS Bioengineering Vicon cameras (sampling frequency = 120 Hz) were used to collect and analyse data. The Kinect camera was placed in front of the subject’s path, at a height of 1.2 m, as illustrated in Figure 1. During the experimental validation, one healthy subject (age 37, male, with no neurological or motor deficits) was asked to walk straight over a distance of about 5 m, delimited by predefined starting and ending points. The subject performed the task 12 times for each system (Kinect and Vicon), for a total of 24 acquisitions. Since both systems use infrared technology, data from the Vicon cameras and from the Kinect v2 were acquired in two separate phases to avoid artifacts.

A custom application was developed to interface with the Kinect sensor, detect the user’s skeleton and perform all the necessary computations, such as determining joint positions and joint angles and converting 3D coordinates into 2D space (Figure 1). The application was developed in C# with WPF, using a third‐party library called Vitruvius [12], which provides useful functionality for analysing body pose and joints, as well as the rotation of a segment in 3D space and its distance from the Kinect. The application is user‐friendly thanks to its intuitive graphical interface, as illustrated in Figure 2.

Figure 1: Experimental layout including Kinect v2 mounted on a tripod, and BTS Bioengineering system with twelve infrared cameras (a). Markers placed on the subject’s body (b).

Figure 2: Kinect interface: measures of joints angles (hip, knee and ankle) along the three axes X,Y,Z.
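As an illustration of the joint‐angle computation mentioned above, the following is a minimal Python sketch; it is not the C#/Vitruvius implementation used in the application. It assumes that each tracked joint is available as an (x, y, z) position in metres and computes the included angle at a joint from three points. The function names and the sample coordinates are hypothetical. The application reports angles along the three axes X, Y, Z (Figure 2); obtaining those would additionally require projecting the segments onto each plane, which is not shown here.

```python
import math


def joint_angle_deg(proximal, joint, distal):
    """Included angle (degrees) at `joint` between the two adjacent segments.

    Each argument is an (x, y, z) tuple, e.g. hip, knee and ankle positions.
    """
    u = [p - j for p, j in zip(proximal, joint)]  # joint -> proximal
    v = [d - j for d, j in zip(distal, joint)]    # joint -> distal
    norm_u = math.sqrt(sum(c * c for c in u))
    norm_v = math.sqrt(sum(c * c for c in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("degenerate segment: two joints coincide")
    cos_theta = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def knee_flexion_deg(hip, knee, ankle):
    """Knee flexion expressed as deviation from a fully extended leg."""
    return 180.0 - joint_angle_deg(hip, knee, ankle)


# Hypothetical coordinates (metres) in the camera reference frame.
hip = (0.0, 0.9, 2.5)
knee = (0.0, 0.5, 2.5)
ankle_straight = (0.0, 0.1, 2.5)  # leg fully extended
ankle_bent = (0.0, 0.5, 2.9)      # shank perpendicular to the thigh

print(knee_flexion_deg(hip, knee, ankle_straight))
print(knee_flexion_deg(hip, knee, ankle_bent))
```

Using the included angle between segments keeps the sketch independent of the camera orientation, at the cost of not separating flexion from abduction or rotation.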

Before starting the acquisition, the operator can enter the name of the patient (later used to create a folder containing all the outputs of the analysis) and the starting leg for the upcoming walk. Once the acquisition has been started (“Start” button), it can be stopped at any moment (“Stop” button). It is also possible to clear the previous acquisition and save the current session. In addition, the software shows when the camera actually detects a skeleton and its position in the camera view. During the acquisition, every time a new frame arrives from the Kinect camera, a new point is added to every graph simultaneously and displayed on the interface. Once the subject has performed the desired task and the “Stop” button has been pressed, the session can be saved in a specific folder containing the data of every graph (in both jpg and csv format), and a PDF report containing all the graphs and a video of the performed task.

After the acquisition, pressing the “Run MATLAB Analysis” button passes the acquired data to a previously built MATLAB library that automatically performs step detection and gait analysis. The library computes the different parameters as average values across all sessions, returns them to the software, and saves the gait analysis plots and trends to an automatically created folder for each subject and for each session performed by that subject (Figure 2).
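The text does not detail the algorithm used by the MATLAB library for step detection. As a rough illustration only, the Python sketch below uses one common heuristic: a step is assumed to occur where the separation between the two ankles along the walking direction reaches a local maximum. The function names, the thresholds `min_peak` and `min_gap`, and the synthetic signal are all assumptions made for this example.

```python
import math


def detect_steps(separation, min_peak=0.2, min_gap=8):
    """Return frame indices where |ankle separation| has a local maximum.

    separation: left-minus-right ankle position along the walking
                direction, one value per frame (metres).
    min_peak:   smallest separation accepted as a step (metres, assumed).
    min_gap:    minimum number of frames between two steps (assumed).
    """
    mag = [abs(s) for s in separation]
    peaks = []
    for i in range(1, len(mag) - 1):
        is_peak = mag[i] >= mag[i - 1] and mag[i] > mag[i + 1]
        if is_peak and mag[i] >= min_peak:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    return peaks


def spatio_temporal(separation, fs=30.0, min_peak=0.2, min_gap=8):
    """Average step time, cadence and step length from detected steps."""
    peaks = detect_steps(separation, min_peak, min_gap)
    if len(peaks) < 2:
        raise ValueError("at least two steps are needed")
    step_times = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    mean_step_time = sum(step_times) / len(step_times)
    step_lengths = [abs(separation[i]) for i in peaks]
    return {
        "n_steps": len(peaks),
        "mean_step_time_s": mean_step_time,
        "cadence_steps_per_min": 60.0 / mean_step_time,
        "mean_step_length_m": sum(step_lengths) / len(step_lengths),
    }


# Synthetic 4 s recording at the Kinect v2 frame rate (30 Hz): the ankle
# separation oscillates at 0.9 Hz with an amplitude of 0.6 m.
fs = 30.0
signal = [0.6 * math.sin(2.0 * math.pi * 0.9 * n / fs) for n in range(120)]
params = spatio_temporal(signal, fs=fs)
print(params)
```

On real skeleton data the separation signal would normally be low‐pass filtered first, and the thresholds would need tuning to the subject and walking speed.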

Results

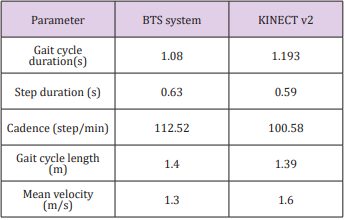

To demonstrate that the proposed system provides reliable measurements, its results were compared with those of the BTS system. Different spatio‐temporal parameters and joint angles were computed and compared (Table 1).

Table 1: Kinematic measures computed with the two systems: the results are presented as mean value across 12 acquisitions

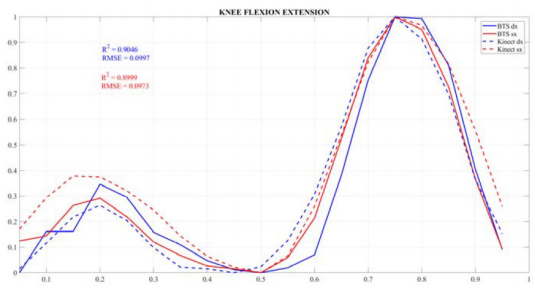

For each parameter, the mean value across all the acquisitions is reported. Figure 3 shows a representative plot of the signals computed for a specific joint angle (knee flexion‐extension) for both the BTS system and the proposed system. For each joint angle, the Root Mean Square Error (RMSE) and the correlation coefficient (R²) between the signals extracted with the two systems were computed. The results show that the measurements provided by the two systems are comparable, as demonstrated by the high values obtained for R² and the low values obtained for the RMSE (Figure 3).
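The comparison described above can be sketched in Python as follows. Because the two systems sample at different rates (30 Hz and 120 Hz), the sketch first resamples both joint‐angle curves to a common number of points over the gait cycle by linear interpolation; this resampling step, the choice of 101 points, the function names and the synthetic curves are assumptions made for illustration. R² is computed here as the squared Pearson correlation coefficient, which is one possible reading of the metric reported in the text.

```python
import math


def resample(signal, n_points=101):
    """Linearly interpolate `signal` onto n_points evenly spaced samples."""
    if len(signal) < 2 or n_points < 2:
        raise ValueError("need at least two samples and two output points")
    last = len(signal) - 1
    out = []
    for k in range(n_points):
        pos = k * last / (n_points - 1)  # fractional index into signal
        i = min(int(pos), last - 1)
        frac = pos - i
        out.append(signal[i] * (1.0 - frac) + signal[i + 1] * frac)
    return out


def rmse(a, b):
    """Root mean square error between two equally long sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def r_squared(a, b):
    """Squared Pearson correlation between two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0.0 or var_b == 0.0:
        raise ValueError("correlation is undefined for a constant signal")
    return cov * cov / (var_a * var_b)


def compare_curves(kinect, reference, n_points=101):
    """Return (RMSE, R^2) after resampling both curves to n_points."""
    k = resample(kinect, n_points)
    r = resample(reference, n_points)
    return rmse(k, r), r_squared(k, r)


# Synthetic knee-angle curves (degrees) over one gait cycle: the reference
# has 121 samples, the Kinect-like curve 31 samples and a 2 degree offset.
reference = [30.0 * math.sin(math.pi * i / 120.0) for i in range(121)]
kinect = [30.0 * math.sin(math.pi * i / 30.0) + 2.0 for i in range(31)]
error, r2 = compare_curves(kinect, reference)
print(error, r2)
```

A constant offset between the two systems raises the RMSE but leaves R² unchanged, which is why the two metrics are informative together.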

Conclusion

A Kinect‐based, markerless and easy‐to‐use system for gait assessment has been presented, and the accuracy of various gait parameters extracted by the proposed method has been evaluated against a commercial gait analysis system. The BTS system certainly has many advantages: it can produce quantitative information about walking and posture, load anomalies and muscle failure, which would not be measurable with normal clinical exams; and, with the help of an operator, it can identify the gait cycle events and produce a complete clinical report. On the other hand, it is extremely operator‐dependent, both for the positioning of the markers and for the post‐processing of the acquired data to create the final report. Furthermore, data analysis is time‐consuming and requires equipment that is not always available in clinical settings.

Similarly, the Kinect has both advantages and drawbacks. Its main limitation is its single camera, against the 12 cameras of the Vicon system.

On the other hand, the experimental campaign demonstrates that the proposed system provides reliable measurements and presents many advantages compared with the commercial system used in this study. It is low‐cost, portable and easy to use; it does not require markers or any preparation of the subject; it does not need camera calibration; and it can automatically compute gait parameters in a few seconds, giving results comparable with those of established commercial systems. A limitation of this study is that it was conducted with just one subject, so further studies will involve many subjects in order to demonstrate the reproducibility of the results. Moreover, other kinematic and spatio‐temporal parameters, which are not currently computed by the proposed system, will be investigated.

References

- Barre A, Armand S (2014) Biomechanical ToolKit: Open‐source framework to visualize and process biomechanical data. Computer Methods and Programs in Biomedicine 114(1): 80‐87.

- (2019) BTS Bioengineering Gaitlab.

- (2019) Microsoft. “Kinect v2.”

- Cafolla D (2019) 3D visual tracking method for rehabilitation path planning. Mechanisms and Machine Science 65: 264‐272.

- Springer S, Yogev Seligman G (2016) Validity of the Kinect for gait assessment: A focused review. Sensors (Basel) 16(2): 194.

- Wang Q, Gao Z (2008) Study on a real‐time image object tracking system. In: 2008 International Symposium on Computer Science and Computational Technology, pp. 788‐791.

- Preis J, Kessel M, Werner M, Linnhoff‐Popien C (2012) Gait recognition with Kinect. In: 1st International Workshop on Kinect in Pervasive Computing, Newcastle, UK, pp. 1‐4.

- Auvinet E, Multon F, Aubin CE, Meunier J, Raison M (2015) Detection of gait cycles in treadmill walking using a Kinect. Gait & posture 41(2): 722‐725.

- Eltoukhy M, Oh J, Kuenze C, Signorile J (2017) Improved kinect‐based spatiotemporal and kinematic treadmill gait assessment. Gait & posture 51: 77‐83.

- Otte K, Kayser B, Mansow Model S, Verrel J, Paul F, et al. (2016) Accuracy and reliability of the kinect version 2 for clinical measurement of motor function. PloS one 11(11): e0166532.

- Rocha AP, Choupina HMP, do Carmo Vilas Boas M, Fernandes JM, Cunha JPS (2018) System for automatic gait analysis based on a single RGB‐D camera. PloS one 13(8): e0201728.

- (2019) Vitruvius Kinect framework.

Research Article
