Impact Factor : 0.548

- NLM ID: 101723284

- OCoLC: 999826537

- LCCN: 2017202541

Birgitta Dresp-Langley*

Received: January 12, 2019; Published: January 25, 2019

*Corresponding author: Birgitta Dresp-Langley, Department of Cognitive Neuroscience, ICube Lab, France

DOI: 10.26717/BJSTR.2019.13.002429

Simulator training for image-guided surgical interventions allows task performance to be tracked in terms of the speed and precision of task execution. Simulator tasks vary in how realistically they reproduce real surgical tasks, and the lack of clear criteria for learning curves and individual skill assessment is, more often than not, a problem. Recent research has shown that trainees frequently focus on getting faster at the simulator task, and this strategy bias often compromises the evolution of their precision score. As a consequence, and whatever the degree of surgical realism of the simulator task, the first and most critical criterion for skill evolution should be task precision, not the time of task execution. This short opinion paper argues that individual training statistics of novices from a simulator task should therefore always be compared with the statistics of an expert surgeon from the same task. This requires that benchmark statistics from the expert be made available, and that an objective criterion, i.e. a parameter measure for task precision, be used for assessing the learning curves of novices.

Keywords: Surgical Simulator Training; Individual Performance Trend; Surgical Expertise; Precision; Speed-Precision Trade-Off Functions

Objective performance metrics [1-12] form an essential part of surgical simulator systems designed for optimal independent training. Testing such metrics on users with different levels of expertise appears mandatory for perfecting existing systems. The most important aspect of an effective training system is the exploitation of individual performance metrics to establish learning curves that users can see and understand, and that help them develop task strategies for measurable skill improvement [6-12]. Metric-based skill assessment ensures that training sessions are more than simulated clinical procedures, and that trainees gain insight into how they are doing in a task and how they could improve their current scores. Not all simulator tasks are based on surgically realistic physical task models, but at the earliest stages of training, surgical task realism is probably not what matters most [13-20]. Whatever the degree of realism of the simulator task, metric-based skill assessment removes subjectivity from the evaluation of skill evolution and leaves no ambiguity about the progress of training.

Moreover, some work has shown that benchmarking individual levels of proficiency against the performance levels of experts on a validated, metric-based simulation system has well-established intrinsic face validity [1,2,10]. It therefore appears to be a better approach than benchmarking on abstract performance concepts or on the basis of expert consensus. Building expert performance, expressed as benchmark metrics, into simulator training programs would provide an almost ideal basis for automatic skill assessment and ensure that desired levels of skill are defined on realistic grounds. Such criteria are, in principle, available in the proficiency levels of individuals who are highly experienced at performing clinical procedures with the highest level of precision [1,11]. This is probably the strongest argument for building expert performance data into any simulator system, for direct comparison with novice data at any moment of the training procedure.
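As an illustration of what benchmarking against an expert can mean in practice, the following minimal Python sketch summarises an expert's trials on one task as means and standard deviations, and expresses a trainee's session in units of the expert's standard deviations. The `Benchmark` structure, the function names, and the use of a precision *error* (lower is better) are assumptions made for illustration, not the implementation used in the cited studies.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass(frozen=True)
class Benchmark:
    """Expert benchmark statistics for one simulator task (hypothetical structure)."""
    time_mean: float   # mean task time (s)
    time_sd: float     # standard deviation of task time (s)
    error_mean: float  # mean precision error; lower = more precise (unit assumed)
    error_sd: float    # standard deviation of the precision error


def benchmark_from_trials(times, errors):
    """Summarise an expert's trials (at least two) as means and standard deviations."""
    return Benchmark(mean(times), stdev(times), mean(errors), stdev(errors))


def z_scores(times, errors, bench):
    """Express a trainee's session means in units of the expert's standard deviations.

    Positive values mean slower (time) or less precise (error) than the expert.
    """
    z_time = (mean(times) - bench.time_mean) / bench.time_sd
    z_error = (mean(errors) - bench.error_mean) / bench.error_sd
    return z_time, z_error
```

For example, with expert times of 8, 10 and 12 s and expert errors of 1, 2 and 3, a trainee session with times of 6 and 8 s and errors of 4 and 6 yields `(-1.5, 3.0)`: faster than the expert, but three expert standard deviations less precise.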

Recently, early simulator training models have been proposed for assessing skill evolution on the basis of individual speed-precision trade-offs, recorded and directly exploited in the light of the benchmark statistics of an expert surgeon in an experimental simulator environment [1,5]. The approach is based on a simple and universal psychophysical model of individual human performance strategies during motor learning [9,21-32]. These models focus on individual speed-precision trade-off functions during early training and rely on objective criteria for task precision and task time at any moment in the evolution of individual performance. The individual speed-precision trade-off functions of complete novices who have trained for a large number of simulator sessions indeed reveal completely different speed-precision strategies. The precision-focused strategy most closely matches the benchmark statistics (means and standard deviations for time and precision) of an expert surgeon, as shown previously [1].
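One simple way to summarise an individual speed-precision trade-off over sessions is to fit linear trends to session-wise task time and precision error. The sketch below does this with ordinary least squares and labels the strategy with a hypothetical rule: a fitted relative drop of at least 10% in precision error counts as precision-focused, a drop in task time alone as speed-focused. The threshold, labels, and function names are assumptions and do not reproduce the psychophysical model cited above.

```python
from statistics import mean


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    mx, my = mean(xs), mean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def relative_trend(values):
    """Fitted change from the first to the last session, relative to the first value.

    Requires at least two sessions and a non-zero first value.
    """
    sessions = list(range(len(values)))
    return ols_slope(sessions, values) * (len(values) - 1) / values[0]


def classify_strategy(session_times, session_errors, tol=0.10):
    """Label a trainee's speed-precision strategy (hypothetical rule and threshold).

    session_times, session_errors: per-session means in session order;
    lower is better for both.
    """
    d_time = relative_trend(session_times)
    d_error = relative_trend(session_errors)
    if d_error <= -tol:
        return "precision-focused"  # precision error falls by at least tol
    if d_time <= -tol:
        return "speed-focused"      # only task time falls
    return "no clear trend"
```

A trainee whose session times fall from 60 to 30 s while the precision error stays flat is labelled speed-focused, the pattern the text describes as compromising the evolution of precision.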

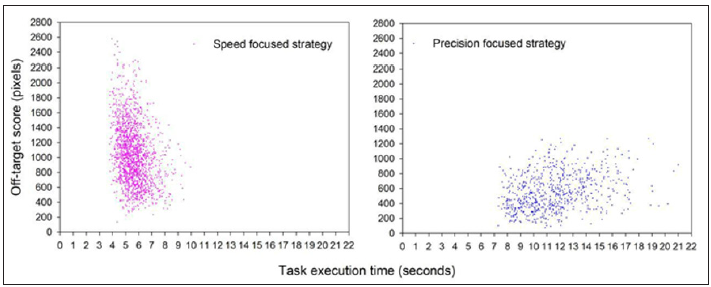

This is illustrated in Figure 1 by the learning curves of two novice trainees from some of these studies [5], validated further in the light of an expert’s benchmark performance statistics. The strategy differences highlighted in Figure 1 occur spontaneously in novices during early training and are, most frequently, completely non-conscious. In other words, trainees at these early stages of simulator training do not really know what they are doing, or what to do to improve their skills effectively. It is therefore in the very earliest stages of simulator training that the most effective guidance is required to bring out the full potential of trainees. How individual strategies should be detected and, if necessary, controlled for and modified as early as possible in simulator training has been discussed on the principle of semiautomatic control procedures in the light of expert benchmark data [1,5]. A training system should know the expert surgeon’s statistics for both task precision and task time, not only time [20,21], and these data should be built into the system.

Figure 1: Two different learning curves expressed in terms of the individual speed-precision trade-off functions of two novices who trained for a large number of sessions.

On this basis, it will be possible to detect and, if necessary, control for individual speed-precision strategies by comparing a trainee’s performance statistics with the expert’s benchmarks. The means and standard deviations of the precision-focused strategy closely match the performance benchmark statistics of an expert surgeon who is highly skilled in clinical precision interventions and who performed the simulator task with no prior training in that specific task, as shown by Batmaz, Dresp-Langley, de Mathelin, and colleagues in some of their recent work [5-9].
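The detection-and-control idea can be sketched as a feedback rule that checks precision before speed, in line with the argument that precision is the first criterion for skill evolution. In this minimal sketch the expert's statistics are passed as a dictionary with hypothetical keys, and the tolerance of two expert standard deviations (`band`) is an assumed value, not one taken from the cited work.

```python
SPEED_BIAS = "speed-bias"
IMPRECISE = "imprecise"
SLOW = "slow"
ON_BENCHMARK = "on-benchmark"

# Hypothetical feedback texts shown to the trainee for each outcome.
MESSAGES = {
    SPEED_BIAS: "Faster than the expert but less precise: slow down and focus on precision.",
    IMPRECISE: "Precision is outside the expert range: focus on precision before speed.",
    SLOW: "Precision is within the expert range: you can now try to work faster.",
    ON_BENCHMARK: "Time and precision are within the expert range.",
}


def strategy_feedback(trainee_time, trainee_error, expert, band=2.0):
    """Compare a trainee's session means with expert benchmarks; precision is checked first.

    expert: dict with keys time_mean, time_sd, error_mean, error_sd (hypothetical names).
    band: tolerance in expert standard deviations (assumed value).
    Returns (code, message).
    """
    z_time = (trainee_time - expert["time_mean"]) / expert["time_sd"]
    z_error = (trainee_error - expert["error_mean"]) / expert["error_sd"]
    if z_error > band:
        # Imprecise; faster than the expert suggests a speed-focused strategy.
        code = SPEED_BIAS if z_time < 0 else IMPRECISE
    elif z_time > band:
        code = SLOW
    else:
        code = ON_BENCHMARK
    return code, MESSAGES[code]
```

Checking precision first means that a trainee who is fast but imprecise is never told to speed up, which is the strategy bias the text warns against.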

Building reliable precision scores into learning-curve approaches in surgical simulator training will ultimately enable the human expert tutors in charge of training programs to provide appropriate feedback to novices when necessary and as early as possible in the program. The goal of early simulator training should clearly be to empower trainees: to bring out the full extent of individual potential and to give every trainee the possibility of attaining the highest level of skill he/she is capable of on the simulator, without the direct intervention of human tutors, who may be biased even when they are experts. This will foster, and ultimately select for, strategy-aware soon-to-be surgeons with optimal precision skills.

How increasingly objective skill assessment will help improve surgical simulator training is still, by and large, an open question. Although artificial intelligence provides well-suited concepts for knowledge implementation, automatic feedback procedures, and the exploitation of prior (learnt) benchmark knowledge, building such procedures into simulator training is anything but straightforward. Early-stage “dry-lab” training programs are offered to large numbers of individuals, often on experimentally developed simulators, and supervision of the training programs by one or two experts is mandatory. Automatic control procedures [13] that exploit metric-based benchmark criteria and perform statistically driven performance comparisons, with trial-by-trial feedback at any given moment, may prove helpful if they are exploited effectively. The goal of early simulator training should be to help the largest possible number of registered individuals reach their optimal performance levels as swiftly as possible [15,23]. Skill assessment in terms of an end-of-session performance status that highlights differences between trainees is not enough, especially when there is no way of knowing what these differences actually mean, i.e. what they tell us about true surgical talent.
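A statistically driven, trial-by-trial comparison might, for instance, monitor a sliding window of the most recent trials. The hypothetical `TrialMonitor` below flags the trainee when the mean precision error over the last `window` trials exceeds the expert mean by more than `z_crit` standard errors, treating the expert's standard deviation as known. The window size, the critical value of 1.96, and that simplification are all assumptions of this sketch.

```python
from collections import deque
from math import sqrt
from statistics import mean


class TrialMonitor:
    """Hypothetical trial-by-trial monitor of precision error against an expert benchmark."""

    def __init__(self, expert_mean, expert_sd, window=5, z_crit=1.96):
        self.expert_mean = expert_mean
        self.expert_sd = expert_sd   # treated as the known population SD (assumption)
        self.z_crit = z_crit
        self.errors = deque(maxlen=window)  # keeps only the most recent trials

    def add_trial(self, error):
        """Record one trial; return True if the recent window is reliably worse than the expert."""
        self.errors.append(error)
        if len(self.errors) < self.errors.maxlen:
            return False  # not enough trials yet for a comparison
        standard_error = self.expert_sd / sqrt(len(self.errors))
        z = (mean(self.errors) - self.expert_mean) / standard_error
        return z > self.z_crit
```

With an expert mean error of 2.0, an expert SD of 1.0 and a window of four trials, four consecutive errors of 3.0 raise a flag (z = 2.0), and a following trial with error 2.0 clears it (z = 1.5). Such a flag could trigger feedback immediately rather than only at the end of a session.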

Faced with the problem of defining reliable performance standards, it is important that simulator systems and the metrics they exploit to control performance evolution, including automatic or AI-assisted procedures with feedback [22], have been validated against the performance parameters of an expert. This will help ensure that the training criteria match those required for performing real surgical tasks, and that the learning task measures some of the most relevant characteristics of surgical skill. Since many different physical task models exist, surgical simulator training is constantly confronted with the problem of generalizing learning curves and, ultimately, with uncertainty about skill transfer to real-world surgical interventions.

The task models and control principles reviewed here, and explained and conceptualized more extensively in the previous work referred to herein, should ideally be implemented at the earliest stages of “dry-lab” simulator training. Some of them could be adapted to a variety of eye-hand-tool coordination tasks that allow computer-controlled criteria for task precision p at any critical moment t of the task. Early simulator training tasks should reliably distinguish the performance levels of a large number of novices from those of a surgical expert who is not necessarily trained on the simulator but exhibits stable, near-optimal performance with respect to task precision. Clearly, if an early training system satisfies this criterion, then it is likely to measure critical aspects of surgical skill that should transfer to real surgical tasks [14-23]. Building precision scores into the learning curves early should help produce a selection of trainees who will perform better later on, in more specific tasks on physical models and in the clinical context. Direct supervision by experts will then allow individual skills to be taken to the next level.
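Whether a task tells novices apart from an expert can be quantified with a standardised effect size. The sketch below uses Cohen's d with a pooled standard deviation, computed on precision errors from novice and expert trials; taking Cohen's conventional "large effect" value of 0.8 as the pass criterion, and the function names, are assumptions rather than a criterion proposed in the text.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled standard deviation (each group needs >= 2 values)."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def task_discriminates(novice_errors, expert_errors, min_d=0.8):
    """True if novices' precision error exceeds the expert's by at least min_d pooled SDs.

    min_d = 0.8 is Cohen's conventional 'large effect' threshold, used here as an assumption.
    """
    return cohens_d(novice_errors, expert_errors) >= min_d
```

For novice errors of 4, 5 and 6 and expert errors of 1, 2 and 3, the pooled SD is 1 and d = 3.0, so the task would pass this (hypothetical) discrimination check.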

Whatever the simulator system and task, whether surgically realistic or not, a single performance metric will inevitably give an incomplete assessment of user performance [1,11,20]. Task completion time, in the absence of other, more telling criteria, is a poor and largely misleading measure of surgical skill evolution [19-21]. Some metrics assume that there is a global optimum performance value, such as a minimal tool path length, a minimal completion time, or other minimal quantities such as forces [7,22] or velocities [24,33-36]. These supposedly optimal values, however, may vary with changes in conditions, which need to be taken into account. In reality, the assumed optimum can only be known through analysis of expert performance in the same task and on the specific simulator. Only such analysis will give insight into the nature of user-task-condition dependencies and, ultimately, help develop better simulators. Moreover, some important elements of surgical proficiency have not yet been explored with a view to including them in largely unsupervised simulator training programs, and the field still needs a large amount of experimental and conceptual work in that direction.
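Because the optimum can only be known from expert performance on the same task and simulator, one way to encode this is to key expert reference values by task, simulator, and condition, and to report each metric's deviation separately rather than merging the metrics into a single score. The key structure, metric names, and function name below are hypothetical.

```python
def expert_relative_deviations(trainee_means, references, task, simulator, condition):
    """Relative deviation of each trainee metric from the expert value for the same setting.

    references: dict keyed by (task, simulator, condition); each value maps metric
    names (e.g. 'time_s', 'path_mm'; hypothetical) to the expert's mean value.
    Returns one deviation per metric; no single composite score is formed.
    """
    key = (task, simulator, condition)
    if key not in references:
        # No expert data for this setting: the 'optimum' is unknown, so do not guess.
        raise KeyError(f"no expert reference for {key}")
    expert = references[key]
    return {
        metric: (trainee_means[metric] - value) / value
        for metric, value in expert.items()
    }
```

Refusing to score a setting without expert data reflects the point that a supposedly optimal value measured under one condition need not hold under another.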

Metric-based criteria for task precision in limited task time are sometimes difficult to define. How, for example, does one measure the precision with which a surgical knot [15] is tied in a given time t? In procedures where the camera moves along with the tool [7,10-16], as is the case with most robot-assisted procedures, the frequency and duration of camera movements or camera movement intervals may be highly important indicators of technical skill. The ease with which the trainee controls the tool may, for example, directly correlate with the precision of tool-target alignment. In combination with other performance metrics such as task completion time, economy of tool motion, or the range of master workspace used, a variety of precision measures may be exploitable, but these have not yet been fully explored or validated. Training control procedures based on device-specific expert performance benchmarks and clear precision metrics will, sooner or later, provide new solutions to the old and still unresolved problem of the heuristic validity of learning curves in surgical simulator training.
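For the camera-based indicators mentioned above, a minimal sketch, assuming the simulator logs a boolean "camera moving" flag at a fixed sampling interval `dt` (in seconds), could derive the number of movement episodes, their durations, and the fraction of task time spent moving the camera. The log format and metric names are assumptions; whether these quantities are valid skill indicators is exactly the open question raised in the text.

```python
def camera_movement_episodes(samples, dt):
    """Split a boolean 'camera moving' log into movement episodes.

    samples: sequence of booleans sampled every dt seconds (assumed log format).
    Returns a list of episode durations in seconds.
    """
    durations = []
    run = 0  # number of consecutive 'moving' samples in the current episode
    for moving in samples:
        if moving:
            run += 1
        elif run:
            durations.append(run * dt)
            run = 0
    if run:  # an episode still open at the end of the log
        durations.append(run * dt)
    return durations


def camera_metrics(samples, dt):
    """Frequency, mean duration and time fraction of camera movements (hypothetical metrics)."""
    durations = camera_movement_episodes(samples, dt)
    total_time = len(samples) * dt
    n = len(durations)
    return {
        "episodes_per_min": 60.0 * n / total_time,
        "mean_episode_s": sum(durations) / n if n else 0.0,
        "moving_fraction": sum(durations) / total_time,
    }
```

Such figures would only become meaningful once compared with the same quantities recorded from an expert on the same device, in keeping with the device-specific benchmarks argued for above.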