Abstract

Purpose: The aim of this study was to present a workflow for obtaining realistic 3D models of human faces, using enhanced tools and features of free software.

Methods: The faces of six (6) subjects of varying ages were digitized by monoscopic photogrammetry following the PlusID (+ID) methodology, combining smartphone captures with image processing in OrtogOnBlender, an add-on for Blender®, an open-source software, running on a PC. Alignment, resizing, unification of texture maps, and the Multiresolution and Displacement modifiers were then applied to the 3D models to achieve more realistic facial features.

Results: 3D models with medium-quality anatomic features were obtained initially and were then enhanced to produce high-resolution models with realistic features and textures of the human face for all subjects. Facial anatomy could be reproduced in *.STL, *.OBJ and other file formats with no major irregularities.

Conclusion: The combined use of multiresolution and displacement features allowed us to increase mesh density & geometric detail, by using the gray scale images of the UV-mapped surface texture to displace the mesh surface of the digital model for more realistic representation of physical features of the human face.

Clinical implication: This workflow allowed us to obtain digital models with more realistic features of the face (compared to more conventionally prepared 3D printing files), to be used for digital analysis, prosthesis design and 3D printing purposes.

Keywords: Face Anatomy; Free Software; Freeware; 3D Modeling; 3D photogrammetry; Multiresolution; Displacement; Blender; Recap360; 3D Printing

Introduction

Digital technology and 3D modeling have been used in industry since the mid-twentieth century to streamline design and manufacturing, provided that users had access to adequate software, training, and equipment. Continuous improvement of available software and hardware allows for increasingly better and more efficient results from 3D technologies [1]. Anatomical study and digital reproduction of the human face have facilitated the production of more realistic 3D models for many industries: gaming, film, forensic science (identification and reconstruction), medicine (diagnosis, surgical treatment planning and device fabrication), facial prosthetics, and numerous other fields and applications [2]. Digital surface scanning of the human face has been proposed since the 1940s. CT scans, magnetic resonance imaging (MRI) and laser scanning devices have been instrumental for this purpose. More recently, stereophotogrammetry, monoscopic photogrammetry and structured light devices have made it possible to also capture skin color and skin texture, without radiation and without any risk of eye damage. Numerous systems are currently available on the international market (3DMD [3DMD® Georgia USA], Vectra [Canfield®, New Jersey USA], Artec [Artec 3D® California USA], and even versions for smartphones and other mobile devices) [3-5].

While most systems have demonstrated accuracy, reproducibility, proportional accuracy, and have been sufficient for 3D printing, the smallest and finest details of the skin are generally not reproducible, providing limited realistic representation of facial features in the resultant 3D models [6]. The purpose of the present study was to present a workflow for processing 3D digital models of the human face, using tools and features of free software in order to achieve more realistic details and fine characteristics of skin and its texture.

Methods

Data Acquisition

Volunteer subjects agreed to take part in the study and were recruited for data capture of the face. Using an Android® smartphone (Asus Zenfone 2®, Asus Inc., Taiwan-China), a frontal face protocol of 26 total captures (13 captures at each of 2 heights) for monoscopic photogrammetry [7] was used to acquire 3D data of the faces of six (6) subjects of varying age and gender (mean age 46, range 27-68; 4 females, 3 males). The “Plus ID (+ID)” workflow was followed. Subjects were instructed to maintain a static head position, close their eyes, and remove hats, glasses, earrings, and other reflective accessories that might interfere with accurate data capture. Participants were also instructed to keep the mouth and lips closed during the photo capture sequence. Subjects were photographed seated against a uniform, contrasting background color. Ambient lighting was indirect natural sunlight of no less than 1000 lux, measured with a Light Meter smartphone app (Trajkovski Labs®). The relative position of subject and operator was chosen to minimize evident shadows on the subject's face.

For scaling purposes, a single linear measurement of an anatomical reference was used (inter-alar distance of the nose). If unfocused images were found, the sequence was repeated. The completed capture sequence of twenty-six (26) images was uploaded to the OrtogOnBlender add-on, programmed by Cicero Moraes in Python for Blender®, an open-source software for PC, using the OpenMVG+OpenMVS tool for 3D photogrammetry model creation, on a computer with an Intel i7 processor and 12 GB of RAM running Linux Ubuntu 16.04.

Preparation of digital 3D models and modifiers application

Alignment: 3D models were aligned in a “front” view on the x-y-z axes. The positions of the ears and eyes were evaluated in “side” views and aligned with the orthogonal function activated for this purpose. The Camper plane was aligned with the “ground” of the editing layer.

Area of Interest Selection: Areas beyond the head that were not of technical use or of interest regarding the face were erased using the Knife Project tool from the “right” fixed point of view.

Resizing: Scaling was performed using the previously registered measurement of the static anatomical reference taken clinically from the subject (inter-alar nasal distance).

Unification of texture maps: This step is usually done automatically by the OrtogOnBlender photogrammetry. When two or more texture maps (*.jpg files) were created by the photogrammetry, they were joined by the “Bake” process in the Render tab, so that instead of 2 or more “UV maps” the model had a single UV map stored in one *.jpg file.
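The resizing step amounts to one uniform scale factor: the clinically measured inter-alar distance divided by the same distance measured on the unscaled model. The sketch below illustrates that arithmetic only. The function names, the landmark coordinates and the 35 mm measurement are hypothetical and are not taken from the study or from OrtogOnBlender.

```python
import math

def scale_factor(measured_mm, left_landmark, right_landmark):
    """Uniform factor that maps the model's landmark distance to the measured one."""
    model_distance = math.dist(left_landmark, right_landmark)
    if model_distance == 0:
        raise ValueError("landmarks coincide; cannot derive a scale factor")
    return measured_mm / model_distance

def scale_vertices(vertices, factor):
    """Apply the same factor to every coordinate of every vertex."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]

# Hypothetical example: alar landmarks picked on the unscaled model (arbitrary units)
left_ala = (0.0, 0.0, 0.0)
right_ala = (0.5, 0.0, 0.0)
measured_inter_alar_mm = 35.0  # hypothetical clinical measurement

factor = scale_factor(measured_inter_alar_mm, left_ala, right_ala)
scaled = scale_vertices([left_ala, right_ala], factor)
print(factor, math.dist(scaled[0], scaled[1]))
```

Because a single factor is applied to all three axes, proportions are preserved and only the absolute size of the model changes.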

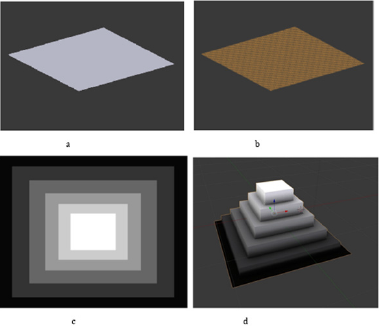

Assignment of “Multiresolution” & “Displacement” Modifiers: With the *.jpg “UV map” selected, the mesh was subdivided with the “Multiresolution” modifier up to 3 times to increase the mesh density and geometric detail (Figures 1a & 1b). Following this, the “Displacement” modifier, driven by the gray scale of the texture map (“UV map”), was applied with a “strength” value of 2 in order to optimize the level of anatomical detail on the mesh of the 3D model (Figures 1c & 1d).

Figure 1:

a. Simple square geometry of 1 cm².

b. Square geometries after multiresolution was applied 3 times to the 1 cm² square.

c. Sample of a texture map over the 1 cm² square mesh.

d. Displacement modifier applied over the 1 cm² square mesh.
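The growth in mesh density shown in Figures 1a and 1b can be illustrated on a single flat square. The following is a minimal sketch in plain Python, not Blender code: it performs a simple uniform split of one quad and does not reproduce the smoothing that a subdivision-surface scheme may apply. The function name `subdivided_grid` is hypothetical.

```python
def subdivided_grid(levels, size=1.0):
    """Return vertices and quad faces of a flat square split `levels` times.

    Each level splits every quad into four, so one quad becomes 4**levels quads.
    """
    n = 2 ** levels            # quads along each side
    step = size / n
    vertices = [(i * step, j * step, 0.0)
                for j in range(n + 1) for i in range(n + 1)]
    faces = []
    for j in range(n):
        for i in range(n):
            a = j * (n + 1) + i                  # lower-left vertex index
            faces.append((a, a + 1, a + n + 2, a + n + 1))
    return vertices, faces

for level in range(4):
    verts, quads = subdivided_grid(level)
    print(level, len(verts), len(quads))
# level 3 gives 81 vertices and 64 quads for the single starting square
```

Three levels give the displacement step 81 vertices to move on that square instead of 4, which is what allows fine texture detail to appear as geometry.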

Evaluation

Resultant 3D models were compared to their non-modified versions for subjective comparison and objective analysis of the mesh, file size and geometries.

Results

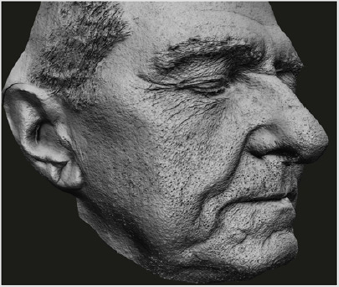

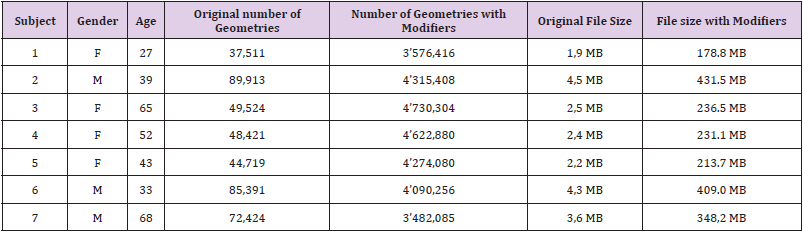

The faces of all subjects were successfully digitized in 3D by monoscopic photogrammetry through a protocolized smartphone capture. The resultant 3D models, which initially showed medium-quality anatomic features on the mesh, could be enhanced to produce very high-resolution models with realistic features and texture of the human face for all subjects. Facial anatomy could then be exported to *.STL, *.OBJ and other file formats with no major irregularities (Table 1, Figures 2-6).

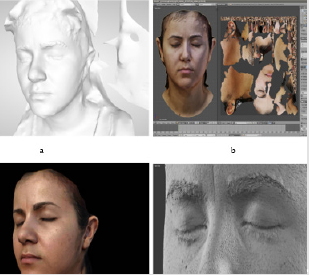

Figure 2:

a. Subject 1 as it is exported from Recap360® without texture map and before applying modifiers.

b. Subject 1 Texture maps unified and applied on the OBJ file.

c. Subject 1 OBJ file with texture map visualized.

d. Subject 1 with modifiers applied. OBJ file without texture map.

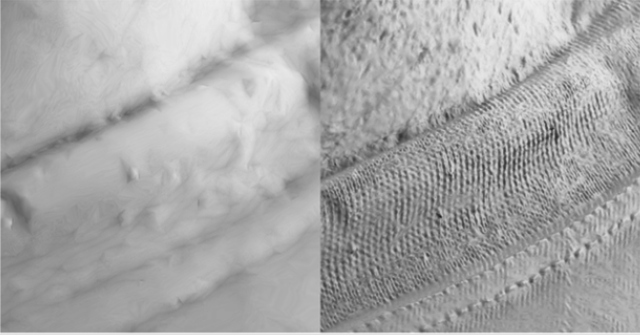

Figure 3: 3D model comparison of the neck and shirt of subject 2 without (left) and with modifiers (right).

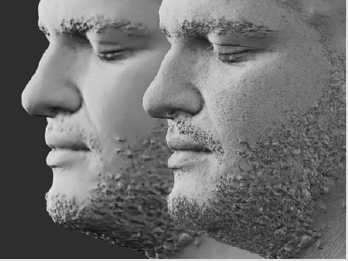

Figure 4: 3D model comparison of the peri-oral structures of subject 4 without (left) and with (right) modifiers.

Discussion

Since its creation in 1995, Blender® has been used in many industries, more recently including medicine [8]. Biomedical professionals increasingly use add-ons programmed for Blender® for digital modeling and analysis, recognizing that software tools for analyzing biological images need user-friendly interfaces and a reasonable learning curve [9]. OrtogOnBlender is an add-on that combines most of the 3D technologies useful for virtual surgery and prosthetic planning, from data acquisition and 3D modeling through to preparing the model for 3D printing, as in the creation of surgical guides or, in the PlusID methodology, the creation of a prototype to optimize a final prosthesis [7]. In this study, we explored the visualization and processing capabilities of the OrtogOnBlender add-on for optimizing 3D models of faces. Our results indicate that this software functionality enables more efficient and high-quality rendering, modeling, visualization, and animation of volumetric data, enhancing the output of 3D photogrammetry software [7,10,11]. Stereophotogrammetry, monoscopic photogrammetry and structured light processes can achieve a colored, volumetric model of the skin of the face with accuracy, reproducibility, and proportional integrity.

However, without an appropriate texture map (UV map), which carries the color information of the surface, the fine details of the skin cannot be accurately and appropriately reproduced in the 3D model. Lacking accurate representation of the realistic features of skin, the resultant 3D printed models are limited in their practical value and clinical applications [4-6,12,13]. The Multiresolution and Displacement modifiers are native tools in Blender® that offer a manual but straightforward solution to enhance the detail of virtual 3D models. Table 1 shows that applying the Multiresolution tool 3 times increased the number of geometries by a mean factor of 75.12 (range 47.9 to 95.4), and that file size in MB increased by a mean factor of 95.6 (range 94.1 to 97.1). This denser mesh allowed more geometries to be deformed by the subsequent use of the Displacement tool based on the corresponding texture map. The texture map is an image file that represents the color and surface detail of the subject’s skin. The working assumption is that the darker a line on the face appears in the texture, the deeper its concavity. For example, if we display a photo in gray scale, the shadows of minute details, skin pores, creases and folds appear darker than the most superficial surfaces of the skin, which are represented by lighter gray values (Figure 6).
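Fold increases of the kind reported in Table 1 are per-subject ratios of the count after the modifiers to the count before, summarized by their mean and range. The sketch below shows that calculation. The face counts are hypothetical placeholders, not the study's data, and the function names are illustrative.

```python
from statistics import mean

def fold_increase(before, after):
    """Per-subject ratio of the value after enhancement to the value before."""
    if len(before) != len(after):
        raise ValueError("before and after must have the same length")
    return [a / b for b, a in zip(before, after)]

def summarize(ratios):
    """Mean and range of a list of ratios."""
    return {"mean": mean(ratios), "min": min(ratios), "max": max(ratios)}

# Hypothetical face counts for two subjects (NOT values from Table 1)
faces_before = [10_000, 20_000]
faces_after = [640_000, 960_000]

ratios = fold_increase(faces_before, faces_after)
summary = summarize(ratios)
print(ratios, summary)
```

The same two functions apply unchanged to file sizes in MB.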

The displacement tool uses the gray scale values of the texture map, where black is the deepest and white the most superficial, to create a deformation pattern of this denser mesh that represents the detailed characteristics of the subject’s skin. Figures 2-6 demonstrate how the OBJ and texture map files can be optimized with the Multiresolution and Displacement modifiers to enhance the realistic features of the facial skin, for design and for prototyping purposes. This study’s intent was not to compare the accuracy of physical anatomical reproductions from virtual 3D models [5,6,11], as previous investigators have compared stereophotogrammetry with smartphone-based monoscopic photogrammetry and found no statistically significant difference between the two methods [14]. The authors of the present study suggest a reproducible and predictable protocol for image capture when utilizing monoscopic photogrammetry [4,8]. In this study we defined a reproducible workflow for further processing of 3D models of the human face, using standardized protocols [7] and native computer graphics modifiers such as Multiresolution and Displacement with OrtogOnBlender, in order to achieve more realistic details and reproducible characteristics of skin.
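The mapping from gray value to surface offset described above can be sketched in a few lines of plain Python. Only the strength of 2 comes from the Methods. The neutral mid-level of 0.5, the luminance weights used to turn RGB into gray, and the function names are all assumptions made for illustration, and Blender's internal conversion may differ.

```python
def luminance(rgb):
    """Gray value in [0, 1] from 8-bit RGB, using common Rec. 601 weights (assumed)."""
    r, g, b = rgb
    return (0.299 * r + 0.587 * g + 0.114 * b) / 255.0

def displacement(gray, strength=2.0, midlevel=0.5):
    """Signed offset: values darker than midlevel push inward, lighter push outward."""
    return strength * (gray - midlevel)

def displace_along_normal(vertex, normal, gray, strength=2.0, midlevel=0.5):
    """Move a vertex along its unit normal by the offset derived from the gray value."""
    d = displacement(gray, strength, midlevel)
    return tuple(v + d * n for v, n in zip(vertex, normal))

# Hypothetical texels: a dark pore and a light highlight on a flat patch facing +z
pore = luminance((40, 30, 30))
highlight = luminance((230, 210, 200))
print(displace_along_normal((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), pore))
print(displace_along_normal((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), highlight))
```

With these assumptions black moves a vertex inward by the full strength times 0.5, white moves it outward by the same amount, and mid-gray leaves it in place, matching the convention that darker texture features correspond to deeper concavities.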

Further studies will be necessary to keep evaluating this application in various industries, including clinical applications for healthcare.

Conclusion

The combined use of the Multiresolution and Displacement features allowed us to increase the geometries of the mesh and to use the gray scale of the texture's UV map to displace the surface of the digital model so that it more accurately represents realistic features of the human face. Starting from monoscopic photogrammetry following the Plus ID (+ID) methodology, this workflow allowed us to obtain digital models with realistic facial features that can be used for digital analysis or 3D printing purposes.

Acknowledgment

None to declare.

Conflicts of Interest

None to declare.

References

- Fletcher Nettleton K (2012) Cases on 3D Technology Application and Integration in Education. Information Science Reference p. 458.

- Kondoz A, Dagiuklas T (2015) Novel 3D Media Technologies.

- Bibb R, Eggbeer D, Paterson A (2015) Medical Modelling: The Application of Advanced Design and Rapid Prototyping Techniques in Medicine. Elsevier Ltd p. 516.

- Salazar Gamarra R, Seelaus R, Da Silva JVL, Da Silva AM, Dib LL (2016) Monoscopic photogrammetry to obtain 3D models by a mobile device: A method for making facial prostheses. J Otolaryngol Head Neck Surg 45(1): 1-13.

- Dindaroǧlu F, Kutlu P, Duran GS, Görgülü S, Aslan E (2016) Accuracy and reliability of 3D stereophotogrammetry: A comparison to direct anthropometry and 2D photogrammetry. Angle Orthod 86(3): 487-494.

- Artopoulos A, Buytaert JAN, Dirckx JJJ, Coward TJ (2014) Comparison of the accuracy of digital stereophotogrammetry and projection moiré profilometry for three-dimensional imaging of the face. Int J Oral Maxillofac Surg 43(5): 654-662.

- Salazar Gamarra R, et al. (2019) Introdução à Metodologia Mais Identidade: Proteses Faciais 3D com a utilização de tecnologias accessíveis para pacientes sobreviventes de cancer no rosto. In: E-book Comunicação Científica e Técnica em Odontologia. Ponta Grossa 251-272.

- Moraes C (2019) OrtogOnBlender: Documentação oficial [Internet]. 2nd (Ed.). Sinop: Cicero André Da Costa Moraes 1-126.

- Roosendaal T (2013) History of Blender.

- Blender (2020) Multiresolution Modifier. Blender 2.78 Manual.

- Blender (2020) Displace Modifier. Blender 2.78 Manual.

- Hsung TC, Lo J, Li TS, Cheung LK (2015) Automatic detection and reproduction of natural head position in stereo-photogrammetry. PLoS One 10(6): 1-15.

- Tzou CHJ, Artner NM, Pona I, Hold A, Placheta E, et al. (2014) Comparison of three-dimensional surface-imaging systems. J Plast Reconstr Aesthetic Surg 67(4): 489-497.

- Koban KC, Leitsch S, Holzbach T, Volkmer E, Metz PM, et al. (2014) [3D-imaging and analysis for plastic surgery by smartphone and tablet: An alternative to professional systems?]. Handchir Mikrochir Plast Chir 46(2): 97-104.

Research Article
