Abstract

Psychological trauma and increased risk for suicide are known occupational hazards for emergency medical services personnel. Technology now exists that an artificial intelligence (AI) neural network can draw on, using contemporary machine learning techniques, to detect psychological trauma and suicide risk. This conceptual paper outlines how such an AI network could be configured to receive and analyze data from multiple sources to save emergency responder lives.

Keywords: Psychological Trauma; Suicide; Paramedic; EMT; Artificial Intelligence

Abbreviations: Emergency Medical Services (EMS); Emergency Medical Technicians (EMTs); Posttraumatic Stress Disorder (PTSD); Computer-Aided Dispatch (CAD); Centers for Disease Control and Prevention (CDC); Substance Abuse and Mental Health Services Administration (SAMHSA); Artificial Intelligence (AI); Electronic Patient Care Report (ePCR); Veterans Affairs (VA); Diagnostic and Statistical Manual of Mental Disorders (DSM-V); Employee Assistance Program (EAP); Smart Phone Application (App)

Introduction

With advances in artificial intelligence (AI), it is now possible to detect psychological trauma and suicide risk among emergency medical services (EMS) personnel: emergency medical technicians (EMTs) and their more highly educated counterparts, paramedics. The purpose of this conceptual paper is to relay how such detection is possible through data mined from multiple information sources and then analyzed. This paper, while addressing the technological components, is not meant to delve into specific mathematical formulas or programming code; rather, it is a “10,000 foot” view of the problem and solution.

The Problem

In the U.S., posttraumatic stress disorder (PTSD) is present in 8-8.7% of the general population American Psychiatric Association [1]. Among paramedics and firefighters this range is much more extreme, with 4.34-30% of paramedics Alexander, et al. [2-10] and 7-37% of firefighters Berger, et al. [11-12] suffering from PTSD. These statistics are important because PTSD is among the top four mental health conditions associated with suicide Bolton [13]. According to the Centers for Disease Control and Prevention [14], there were over 47,000 suicide deaths in the U.S. in 2017. In fact, in that same year, there were more suicides than deaths due to fires National Fire Protection Association [15], traffic accidents National Highway Traffic Safety Administration [16], murders Federal Bureau of Investigation [17], or severe weather National Oceanic and Atmospheric Administration [18]. When it comes to emergency medical responders, the rate of suicide deaths is significantly higher than that of the general public Caulkins [19].

Husserl [20] developed the theory of phenomenology, that is, events and situations in life are experienced differently from person to person. A paramedic and EMT team may go to a call for a 22-year-old woman struck and killed by a vehicle. The paramedic may overly identify with the tragedy because he has a 22-year-old daughter of his own, whereas the EMT may be less affected because she is single with no children. Everyone has their own individual perfect storm of circumstances that result in psychological trauma or suicide Caulkins [21]. The formation of this perfect storm is a series of chaotic and often non-linear events that a well thought out AI can detect much more easily Alexandridis [22]. The risk of incurring psychological trauma with a higher likelihood of suicide is much higher among EMS personnel than the public Caulkins [21]. Saving those who save others is of paramount importance and is akin to putting one’s own oxygen mask on before helping the person next to them in a flight emergency. Something must be done, and AI holds part of the answer.

Concept

My concept consists of an AI program, part of a larger system, that interfaces with internet databases, a smart phone application (app), physiological monitoring, electronic patient care reports (ePCRs) written after each patient contact, and the computer-aided dispatch (CAD) system. The AI uses knowledge of evidence-based risk factors to analyze data, thereby recognizing potential psychological trauma encountered by the individual EMS provider. The five components the AI draws information from are the following.

Internet Databases

In the U.S., there is a wealth of data collected on suicide and related phenomena by various governmental agencies. This includes, but is not limited to, information available from the CDC, state departments of health, the Substance Abuse and Mental Health Services Administration (SAMHSA), and the Department of Veterans Affairs (VA). Information available for retrieval includes suicide rates by state and county, crude mortality rates of suicide, methods of suicide, rates of suicidal thoughts, number of suicide attempts, and much more. All of this data can be sliced demographically by age, race, ethnicity, sex, etc.

App

An app is downloaded onto the EMS provider’s smart phone, or it can be accessed via a tablet device or directly through an internet web browser. The personal information listed below is collected. The EMS provider enters data pertinent to themselves, along with select information about their family members. This information is confidential and may only be seen by a non-employer system administrator. EMS providers must have confidence in the confidentiality of the system, or this major system component will be jeopardized.

1. Adverse Childhood Event Details

2. Abuse History

3. Birth Date

4. Children’s Birth Date(s)

5. Criminal Victimization

6. Date Started in EMS

7. Education Level

8. Marital Status

9. Mental Health History

10. Physical Health History

11. Race/Ethnicity

12. Sex

13. Sexual Orientation

14. Significant Other’s Birth Date

15. Veteran Status

Additionally, after the EMS provider drops the patient off at the hospital and goes back into service for another call (as known by referencing CAD), the app will ask the EMS provider what their level of distress is on a 0-10 scale, with 10 being the most distressed. These linear numeric scales lend themselves well to multiple linear regression statistical techniques, which are covered in more depth later in this paper. The work partner or supervisor of the EMS provider will also be able to report their perception of the EMS provider’s distress. This notification option could also extend to close family members who note concerning behaviors. There would be plausible deniability in that one would be unable to know whether the AI algorithms picked up on distress independently of others or whether others’ reports prompted the AI.

Physiological Monitoring

There is a variety of wearable technology that measures heart rate, respiratory rate, and even brain waves (electroencephalograms or EEGs) and circadian rhythms. EMS providers would be equipped with a form of wearable technology that collects this data. There are many devices including watches, waist bands, and head gear. When a person is stressed, respiration quickens, heart rate increases, and sleep cycles may be disrupted. Physiological monitoring is being used successfully in the military Friedel, et al. [23,24] and provides translatable results that can be applied to emergency responders.

ePCRs

Every time an EMS provider assesses or treats a patient, a patient care report (PCR) must be written. While there are likely some holdout ambulance services that have not yet moved to ePCRs, many services have made the investment. The ePCR holds a wealth of data on the patient, the EMS crew’s actions, and the particulars of the incident. The ePCR consists of check boxes, numerical entries, and a narrative. Patient contacts will be considered on an individual and aggregate basis.

CAD

The computer-aided dispatch system (CAD) tracks calls for service, length of time on the call, special notes, etc. This may include law enforcement directions/requests, any warnings about the location or patient, frequency of 9-1-1 calls for EMS to the location and/or patient, EMS crew members responding, number of times the crew has encountered this particular patient, amount of time spent with a patient, and much more.

Artificial Intelligence Function

Overall, the job of the AI system is to pull data from the five sources and synthesize and analyze the information in search of evidence and probability of psychological trauma and/or risk of suicide. The system reassesses any time new data is introduced. Psychological trauma is defined as any symptoms present in the constellation of diagnostic criteria for any number of trauma-related maladies specified in the American Psychiatric Association’s [1] Diagnostic and Statistical Manual of Mental Disorders (DSM-V); the symptoms do not necessarily have to meet the threshold for formal diagnosis. The objective is to recognize compounding symptoms before the psychological trauma reaches a formal diagnostic level. This AI system is a digital health intervention, defined as “a discrete functionality of digital technology that is applied to achieve health objectives and is implemented within digital health applications and ICT [information and communications technology] systems...” World Health Organization [WHO] [25]. As such, continued compliance with WHO guidelines is highly recommended.

Upon identification of potential psychological trauma or suicide risk, the AI sends a private message to the EMS provider and another designee. The designee may be a supervisor or a person responsible for mental health initiatives; this may include employee assistance program (EAP) personnel or a member of a peer support team. The message to the EMS provider states, “We believe you may have recently suffered some level of psychological trauma.” Resources for mental health, coping strategy suggestions, and links to helpful articles will accompany the message. The message received by the designee will state, “There is reason to believe that [employee name] has recently been exposed to a situation with the potential for psychological trauma. We recommend you touch base with [employee name] as soon as practical.” This message will include information on how to have a dialogue about psychological trauma, mental wellness, and suicide. Also included will be referral numbers and helpful website links.
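
The two notifications described above could be assembled along the following lines. This is a minimal sketch, not a specification: the `Alert` class, the `build_alerts` function, and the recipient labels are hypothetical names introduced for illustration, and only the message wording follows the text above.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    recipient: str  # hypothetical label: "provider" or "designee"
    body: str


PROVIDER_TEXT = (
    "We believe you may have recently suffered some level of "
    "psychological trauma."
)
DESIGNEE_TEXT = (
    "There is reason to believe that {name} has recently been exposed to a "
    "situation with the potential for psychological trauma. We recommend "
    "you touch base with {name} as soon as practical."
)


def build_alerts(employee_name: str) -> list[Alert]:
    """Return the private message pair: one for the provider, one for the designee."""
    return [
        Alert("provider", PROVIDER_TEXT),
        Alert("designee", DESIGNEE_TEXT.format(name=employee_name)),
    ]


# Hypothetical employee name, used only to show the substitution.
alerts = build_alerts("J. Doe")
```

Resources, referral numbers, and website links would be attached to each message separately; they are left out here because the paper does not enumerate them.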

Configuration

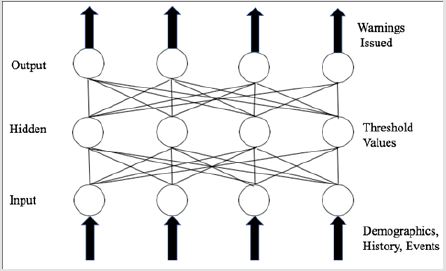

The AI is composed of a neural network, which is modeled on the human neurological system Alexandridis [22]. As such, this type of AI is capable of classifying information, learning, and fault-tolerant storage, which confers protection from a failure in the system through redundant back-up of information on many computers and devices Crump, et al. [26,27]. When it comes to psychological trauma, a failure in the system could result in mortality or significant morbidity. This AI incorporates a wavelet neural network, which has three layers: the input, hidden, and output layers (p. 61). Explanatory variables are fed into the input layer and given a probability score in the hidden layer; once the score reaches a threshold level determined by the neural network, it is sent on to the output layer to produce the warning that psychological trauma may have occurred and/or there is an elevated risk of suicide.
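
The three-layer flow can be illustrated with a minimal Python sketch. Several details are assumptions that the paper does not specify: the Mexican hat function as the hidden-layer wavelet, the logistic squashing of the output, the function names, and every numeric value. For simplicity the threshold is applied to the final score rather than inside the hidden layer.

```python
import math


def mexican_hat(z: float) -> float:
    """Mexican hat mother wavelet, a common (assumed) hidden-unit activation."""
    return (1.0 - z * z) * math.exp(-0.5 * z * z)


def forward(inputs, translations, dilations, out_weights, out_bias):
    """One forward pass: input layer -> wavelet hidden layer -> score in (0, 1)."""
    hidden = []
    for t_row, d_row in zip(translations, dilations):
        # Each hidden unit multiplies one shifted and scaled wavelet per input.
        unit = 1.0
        for x, t, d in zip(inputs, t_row, d_row):
            unit *= mexican_hat((x - t) / d)
        hidden.append(unit)
    raw = out_bias + sum(w * h for w, h in zip(out_weights, hidden))
    return 1.0 / (1.0 + math.exp(-raw))  # logistic squashing (assumption)


def should_warn(score: float, threshold: float) -> bool:
    """A warning is produced once the score reaches the threshold."""
    return score >= threshold


# Hypothetical, already-normalized inputs: distress score and heart-rate level.
score = forward(
    inputs=[0.9, 0.8],
    translations=[[1.0, 1.0], [0.0, 0.0]],
    dilations=[[1.0, 1.0], [1.0, 1.0]],
    out_weights=[3.0, -3.0],
    out_bias=0.0,
)
warn = should_warn(score, threshold=0.7)
```

In a trained network the translations, dilations, and output weights would be learned rather than set by hand as they are here.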

Individual Case Analysis

Because of the uncertain and subjective nature of psychological trauma, the AI utilizes fuzzy modeling, which is based on a theory by Zadeh [28] that considers information that often, usually, or sometimes results in a particular outcome Doumpos [29]. This is combined with the concept of outranking, whereby x is more likely than y to cause z Fodor, et al. [30,31]. I propose using outranking in that, given v, x + y is more likely to cause z than y alone. We determine probabilities based on the evidence-based findings of researchers publishing on psychological trauma, suicide, and other related phenomena. The AI calculates using Bayesian logic, which works backwards from a potential given event, such as psychological trauma or suicide, and calculates the probability of various factors in causing that situation Ding, et al. [32,33]. Bayesian models have an evidence-based track record in estimating the relative risk of suicide within geographic boundaries Mahaki, et al. [34]. Figure 1 is a graphic depiction of the neural network configuration. Input is received from the data sources and sent to the hidden layer. At the hidden layer, Bayesian logic is applied and, if the predetermined threshold is achieved, the input progresses to the output layer to trigger the AI to act on the identified concern.
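
The Bayesian step and the proposed outranking relation can be made concrete with a short Python sketch. All probabilities below are invented for illustration and are not research findings; the function names are hypothetical, and combining factors one after another assumes they are conditionally independent, which is a simplification.

```python
def posterior(prior: float, p_factor_given_event: float,
              p_factor_given_no_event: float) -> float:
    """Bayes' rule: probability of the event after observing one factor."""
    numerator = p_factor_given_event * prior
    return numerator / (numerator + p_factor_given_no_event * (1.0 - prior))


def update_over_factors(prior: float, likelihoods) -> float:
    """Apply Bayes' rule once per observed factor (assumes independence)."""
    probability = prior
    for given_event, given_no_event in likelihoods:
        probability = posterior(probability, given_event, given_no_event)
    return probability


# Hypothetical values: baseline probability v, then factors x and y as
# (P(factor | trauma), P(factor | no trauma)) pairs.
v = 0.10
x = (0.6, 0.2)
y = (0.5, 0.25)

p_y_alone = update_over_factors(v, [y])
p_x_and_y = update_over_factors(v, [x, y])
```

With these made-up numbers, observing x and y together yields a higher probability than observing y alone, which is the outranking relation proposed above.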

Aggregate Analysis

The AI will synthesize and analyze the aggregate data from all individual cases and search for trends that apply to the whole. This analysis will be used to inform threshold values in the hidden layer (Figure 1) of the neural network when the individual is first entered into the system. Until aggregate data is available, Bayesian logic will be set at a number reached by the expert consensus of suicidologists, an expert being defined as a person in the field who has studied, researched, and presented on suicide and related phenomena intensively for a minimum of ten years Ericsson [35]. This manual construction will be necessary until automatic learning from the Bayesian analysis becomes robust enough Horný [36].
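
One possible way to move from an expert-set value to a data-driven one is sketched below. The paper does not prescribe this method: treating the expert consensus as a Beta prior and updating it with observed counts is my assumption, as are the function name, the `expert_weight` parameter, and all numbers.

```python
def blended_rate(expert_rate: float, expert_weight: float,
                 events: int, cases: int) -> float:
    """Posterior mean of a Beta prior updated with observed counts.

    expert_rate   -- rate agreed on by expert consensus (assumed input)
    expert_weight -- how many observed cases the consensus is worth (assumed)
    events, cases -- aggregate outcomes recorded by the system so far
    """
    prior_events = expert_rate * expert_weight
    prior_non_events = (1.0 - expert_rate) * expert_weight
    return (prior_events + events) / (prior_events + prior_non_events + cases)


# With no aggregate data, the value is simply the expert consensus.
initial = blended_rate(0.20, 50, events=0, cases=0)

# As cases accumulate, the observed rate (30 of 100 here) pulls the value along.
later = blended_rate(0.20, 50, events=30, cases=100)
```

A larger `expert_weight` keeps the manually constructed value in force for longer before the aggregate data dominates.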

Learning

Neural nets and connection weights between neurons are adjusted by means of backpropagation. Backpropagation provides respectable self-learning, self-adaptation, and ability to generalize. Unfortunately, backpropagation has a poor rate of convergence, easily becomes trapped in local minima, and has problems processing complex or gradient information Ding, et al. [32]. For this reason, a genetic algorithm will be incorporated within the neural network. This algorithm searches globally through the information and performs an analysis on known outcomes entered into the system via the app or system administrator (p. 154). There has been success in utilizing a genetic algorithm and neural network together, which could very likely accelerate the AI learning process Stastny, et al. [37,38].
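
The global search performed by a genetic algorithm can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the hybrid method of the cited works: a single logistic unit stands in for the full network, backpropagation is left out, and the population size, mutation scale, and training data are all invented.

```python
import math
import random


def predict(weights, x):
    """Single logistic unit standing in for the full network (simplification)."""
    z = weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))
    z = max(-60.0, min(60.0, z))  # clamp to keep exp() well within range
    return 1.0 / (1.0 + math.exp(-z))


def error(weights, data):
    """Mean squared error against known outcomes; lower is fitter."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)


def evolve(data, n_weights, pop_size=30, generations=40, seed=0):
    """Search weight space by selection, crossover, and mutation."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(n_weights)]
           for _ in range(pop_size)]
    history = []  # best error at the start of each generation
    for _ in range(generations):
        pop.sort(key=lambda w: error(w, data))
        history.append(error(pop[0], data))
        parents = pop[: pop_size // 2]       # selection: keep the fitter half
        children = [pop[0][:]]               # elitism: best survives unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_weights)  # single-point crossover
            child = a[:cut] + b[cut:]
            i = rng.randrange(n_weights)       # mutate one randomly chosen gene
            child[i] += rng.gauss(0.0, 0.3)
            children.append(child)
        pop = children
    return min(pop, key=lambda w: error(w, data)), history


# Hypothetical known outcomes: (normalized inputs, trauma incurred or not).
data = [([0.9, 0.8], 1), ([0.2, 0.1], 0), ([0.8, 0.9], 1), ([0.1, 0.3], 0)]
best, history = evolve(data, n_weights=3)
```

Because the fittest candidate is carried forward unchanged, the best error never gets worse from one generation to the next; in a hybrid scheme the resulting weights could then be handed to backpropagation for fine adjustment.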

A series of logistic and/or linear regressions can be run to refine the probability matrices and self-adjust thresholds. For instance, a paramedic enters a 10 on the 0-10 distress scale for a pediatric drowning call, and at the same time his wearable technology notes that his breathing and pulse rates have risen to 150% of his normal baseline. The system pulls the factors into a binary logistic regression, produces a probability unique to that paramedic, and adjusts his threshold value for these types of calls. The dependent variable is a binary proposition, such as psychological trauma incurred or not, suicidal ideation or not, suicide attempt or not, and suicide death or not. Psychological trauma can be further parsed, such as compassion fatigue or not, acute stress disorder or not, and PTSD or not. The AI can also calculate a linear regression using the distress score, heart rate, or other linear data as the dependent variable. Potential factors are the independent variables. The purpose of the linear regression is to determine which factors are correlated with the numerical rise of the dependent variable Field [39]. By way of example, it may be that distress increased in proportion to time spent with a critically injured or ill patient.
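
Both kinds of regression can be sketched in plain Python. The data are invented for illustration, the function names are hypothetical, and the logistic model is fitted by simple gradient descent as one possible approach; the second predictor is the physiological rise over baseline, so 0.5 is assumed to correspond to readings at 150% of baseline.

```python
import math


def fit_linear(xs, ys):
    """Ordinary least squares for one predictor; returns (intercept, slope)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def predict_proba(w, x):
    """Probability of the binary outcome for one row; w[0] is the intercept."""
    z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(rows, labels, lr=0.5, epochs=3000):
    """Binary logistic regression fitted by batch gradient descent."""
    w = [0.0] * (len(rows[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(rows, labels):
            residual = predict_proba(w, x) - y
            grad[0] += residual
            for j, xj in enumerate(x, start=1):
                grad[j] += residual * xj
        w = [wj - lr * g / len(rows) for wj, g in zip(w, grad)]
    return w


# Hypothetical rows: [distress / 10, rise over physiological baseline].
rows = [[1.0, 0.5], [0.9, 0.4], [0.8, 0.3], [0.3, 0.0], [0.2, 0.1], [0.1, 0.0]]
trauma = [1, 1, 1, 0, 0, 0]  # dependent variable: trauma incurred or not
weights = fit_logistic(rows, trauma)
p_drowning_call = predict_proba(weights, [1.0, 0.5])
p_routine_call = predict_proba(weights, [0.1, 0.0])

# Hypothetical linear case: minutes with a critical patient vs. distress score.
intercept, slope = fit_linear([10, 20, 30, 40], [3, 5, 7, 9])
```

The fitted probability for a call of a given type could then be compared against, or used to adjust, that provider's threshold value; a positive slope in the linear case would indicate distress rising with time spent on scene.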

Conclusion

Psychological trauma and suicide disproportionately affect those working in the emergency medical services field. Helping emergency responders will increase their chance of saving lives of the public and better prevent serious disability. An artificial intelligence system pulling from multiple sources of data can be an important tool in the rapid and early detection of psychological trauma and suicide risk in emergency responders. The optimal system is capable of the continuous and immediate analysis of the probability of dangerous EMS provider distress. The system utilizes expert consensus supported by research to establish initial parameters until it is capable of self-learning. The system monitors individuals and the workforce. AI can save those who save others, and the time to start is now.

References

- (2013) American Psychiatric Association, Diagnostic and statistical manual of mental disorders (5th ed.). Washington DC: Author, USA.

- Alexander DA, Klein S (2001) Ambulance personnel and critical incidents. British Journal of Psychiatry 178(1): 76-81.

- Bennett P, Williams Y, Page N, Hood K, Woollard M, et al. (2004) Levels of mental health problems among UK emergency ambulance workers. Emergency Medicine Journal 21(2): 235-236.

- Clohessy S, Ehlers A (1999) PTSD symptoms response to intrusive memories and coping in ambulance service workers. British Journal of Clinical Psychology 38(3): 251-265.

- Fjeldheim CB, Nöthling J, Pretorius K, Basson M, Ganasen K, et al. (2014) Trauma exposure, posttraumatic stress disorder, and the effect of explanatory variables in paramedic trainees. BMC Emergency Medicine 14: 11.

- Grevin F (1996) Posttraumatic stress disorder ego defense and empathy among urban paramedics. Psychological Reports 79(2): 483-495.

- Jonsson A, Segesten K, Mattsson B (2003) Post-traumatic stress among Swedish ambulance personnel. Emergency Medicine Journal 20(1): 79-84.

- Michael T, Streb M, Häller P (2016) PTSD in paramedics: Direct versus indirect threats, posttraumatic cognitions, and dealing with intrusions. International Journal of Cognitive Therapy 9(1): 57-72.

- Regehr C, Goldberg G, Hughes J (2002) Exposure to human tragedy empathy and trauma in ambulance paramedics. American Journal of Orthopsychiatry 72(4): 505-513.

- Streb M, Häller P, Michael T (2014) PTSD in paramedics and sense of coherence. Behavioral and Cognitive Therapy 42(4): 452-463.

- Berger W, Coutinho ESF, Figueira I, Marques Portella C, Luz MP, et al. (2012) Rescuers at risk: A systematic review and meta-regression analysis of the worldwide current prevalence and correlates of PTSD in rescue workers. Social Psychiatry and Psychiatric Epidemiology 47(6): 1001-1011.

- Del Ben KS, Scotti J R, Chen Y, Fortson BL (2006) Prevalence of posttraumatic stress disorder symptoms in firefighters. Work & Stress 20(1): 37-48.

- Bolton J, Robinson J (2010) Population-attributable fractions of axis I and axis II mental disorders for suicide attempts: Findings from a representative sample of the adult, noninstitutionalized US population. American Journal of Public Health 100(12): 2473-2480.

- (2019) Centers for Disease Control and Prevention, National Center for Health Statistics. Leading causes of death National and regional 1999-2017 on CDC WISQARS Online Database.

- (2018) National Fire Protection Association, Fact sheet research: An overview of the U.S. fire problem.

- National Highway Traffic Safety Administration (n.d.) Fatality Analysis Reporting System (FARS) encyclopedia: National statistics.

- (2019) Federal Bureau of Investigation. (n.d.) Uniform crime reports: Murder.

- (2018) National Oceanic and Atmospheric Administration. Natural hazard statistics.

- Caulkins CG (2018) Suicide among emergency responders in Minnesota: The role of education.

- Husserl E (1913/2014) Ideas for pure phenomenology and phenomenological philosophy: First book - general introduction to pure phenomenology. Hackett Publishing Company Inc, Cambridge MA, USA.

- Caulkins CG (2015) Suicide: Quelling the perfect storm.

- Alexandridis AK, Zapranis AD (2014) Wavelet neural networks: With applications in financial engineering, chaos and classification. Wiley & Sons Inc, Hoboken NJ, USA.

- Friedel KE (2018) Military applications of soldier physiological monitoring. Journal of Science and Medicine in Sport 21(11): 1147-1153.

- Wyss T, Roos L, Beeler N, Veenstra B, Delves S, et al. (2017) The comfort, acceptability and accuracy of energy expenditure estimation from wearable ambulatory physical activity monitoring systems in soldiers. Journal of Science and Medicine in Sport 20(2): 133-134.

- (2019) World Health Organization, WHO guideline: Recommendations on digital interventions for health system strengthening.

- Crump G (2012) Fault tolerant vs. highly available storage.

- Johnston S J (2001) Fault tolerant file storage.

- Zadeh L A (1965) Fuzzy sets. Information and Control 8(3): 338-353.

- Doumpos M, Grigoroudis E (2013) Multicriteria decision aid and artificial intelligence: Links Theory and application. John Wiley & Sons Inc, West Sussex, UK.

- Fodor J C, Roubens M R (1994) Fuzzy preference modelling and multicriteria decision support. Berlin Germany.

- Roubens M (1997) Fuzzy sets and decision analysis. Fuzzy Sets and Systems 90(2): 199-206.

- Ding S, Su C, Yu J (2011) An optimizing BP neural network algorithm based on genetic algorithm. Artificial Intelligence Review 36(2): 153-162.

- Dowe DL (2010) MML hybrid Bayesian network graphical models, statistical consistency invariance and uniqueness. In Bandyopadhyay P S, Forster M R (Eds.). Handbook of the philosophy of science, Volume 7: Philosophy of statistics. Elsevier BV, Oxford, UK, pp. 901-982.

- Mahaki B, Mehrabi Y, Kavousi A, Mohammadian Y, Kargar M, et al. (2015) Applying and comparing empirical and full Bayesian models in study of evaluating relative risk of suicide among counties of Ilam province. Journal of education and health promotion 4(1): 50.

- Ericsson KA (1996) The acquisition of expert performance: An introduction to some of the issues. In Ericsson KA (Ed.) The road to excellence: The acquisition of expert performance in the arts and sciences, sports, and games. Erlbaum, Mahwah NJ, USA, pp. 1-50.

- Horný M (2014) Bayesian networks.

- Stastny J, Skorpil V (2007) Genetic algorithm and neural network. Proceedings of the 7th WSEAS International Conference on Applied Informatics and Communication Greece pp. 345.

- Suryansh S (2018) Genetic algorithms + neural networks = Best of both worlds.

- Field A (2013) Discovering statistics using IBM SPSS statistics (4th ed.). Sage, Los Angeles, CA.
