Abstract

Objectives: Technological advances have brought many innovative approaches and techniques to modern surgery, such as CAS, NESS, and robotic surgery, and we can now also use “surgery in the air”. This newest approach can be understood as “contactless surgery” and as part of the adoption of augmented and virtual reality in next-generation information technology and medical healthcare.

Methods: Our experience in using a DICOM viewer and a computer hardware sensor device that supports hand and finger motions to control the surgical system without touching anything has upgraded our understanding of the “human anatomical natural world” and enabled us to develop appropriate sensitivity to and awareness of the different coloration of normal and pathologic tissues in pre/intraoperative diagnostic/surgical activities.

Results: In our experience, taking an innovative “virtual surgical ride” through the human anatomy via a top-class contactless surgery system is a completely nontraumatic, safe, and ideal starting point for exploring this surgical philosophy, with the many distinct and very pleasant anatomic neighborhoods of the human head, as we have already discussed.

Conclusion: From our point of view, contactless surgery in “non-existing virtual space” today seems significantly different from what it was just a few months ago, being a typical representative of the adoption of augmented reality and VR in next-generation IT and medical healthcare. We strongly believe that contactless “in the air” surgery is very special and fascinating, and it comes as no surprise that it remains useful and attracts not only those who already know it, but also new professional medical users of the new era. Finally, it will help us rebuild our understanding of human orientation in surgery and navigate very successfully through different anatomic tissues, as well as empty spaces, throughout the surgical process, wherever it may occur.

Keywords: Gesture control; Artificial intelligence; Voice commands; Navigation surgery; Telesurgery; Augmented reality; Region of interest; 3D volume rendering; Leap Motion; OsiriX MD; Virtual endoscopy; Virtual surgery; Swarm intelligence

Abbreviations:

AI: Artificial Intelligence; AR: Augmented Reality; CA/CAS: Computer Assisted/Computer Assisted Surgery; DICOM: Digital Imaging and Communications in Medicine; FESS/NESS: Functional Endoscopic Sinus Surgery/Navigation Endoscopic Sinus Surgery; HW/SW: Hardware/Software; IT: Information Technology; LM: Leap Motion; MIS: Minimally Invasive Surgery; MRI: Magnetic Resonance Imaging; MSCT: Multislice Computed Tomography; NCAS: Navigation Computer Assisted Surgery; OMC: Ostiomeatal Complex; OR: Operation Room; ROI: Region of Interest; RTG: Roentgenography (X-ray imaging); SWOT: Strengths, Weaknesses, Opportunities, Threats; VC: Voice Command; VE/VS: Virtual Endoscopy/Virtual Surgery; VSI: Virtual Surgery Intelligence; VR/VW: Virtual Reality/Virtual World; 2D/3D: Two Dimensional/Three Dimensional; 3DVR: Three-Dimensional Volume Rendering

Introduction

There is a growing demand for IT and medical specialists who are able to develop complex IT solutions (such as the medical and IT members of our Bitmedix team) and who have comprehensive knowledge of augmented reality and VR-based medicine. This field is mostly oriented towards clinical practice and the education of medical students, providing VR-based education in medicine and robot-assisted surgery, clinical interactive VR-based exposure therapy tools [1], and our “on the fly” gesture-controlled incisionless surgical interventions [2]. This “learning process” could be enabled by different VR tools and devices, such as VR headsets that display a particular environment to simulate a user’s physical presence in a virtual or imaginary setting, or avatars that can be interacted with, as at Houston Methodist [3] or at the Stanford Neurosurgical Simulation and Virtual Reality Center [4], where surgeons in the BrainLab can use MRI imaging to map out an individual patient’s brain. This enables them not only to plan surgery but also to perform it, even in hard-to-reach places [3,4].

Immersive AR/VR technologies have been adopted in medical applications, bridging the real and virtual worlds to amplify the real-world setting. One example of virtual embodiment in therapeutic neuroscience, where AR/VR technologies are redefining the concept of the virtual tour, is the Karuna project [5]. This research connects VR and motion tracking using a commercial VR headset. However, it relies on a 360-degree controller and headset for motion tracking, which occupies the user’s hands and makes it unsuitable for any kind of contactless surgery. Although there is a myriad of AR/VR solution providers and adaptations to different needs, there is no complete solution for touchless “in the air” surgery, as we have already discussed [2,6]. Our initial step was to select distinguished existing solutions in order to adapt them to our augmented reality approach in CAS/NESS. On the other hand, the Hashplay project provides analytic structures that allow users to understand the context of data and act on them [7], helping to make sense of complex systems in real time, but it is mostly oriented towards solutions for smart cities. Our AI solution should pull data from multiple sources, process them, and visualize them in a straightforward way that enables touchless interaction with the system for the medical specialist [2].

One step forward in that direction is the SpectoVive project from the University of Basel [8], where Dr. Cattin set up a VR room that allows an anatomic model to be explored in a new way: it is possible to walk around the model, scale it, move it, or look at it from the inside out by using a VR headset. In preparing for “in the air” surgery, we process data using artificial intelligence. Medical specialists spend a lot of time in preoperative planning extracting all important data from CT and MRI data formats. The ROI is usually defined based on expert knowledge, but this is not automated. There are some existing approaches to system design for AI in virtual surgery. One example is Virtual Surgery Intelligence (VSI), a smart medical software for surgeons based on mixed/augmented reality and artificial intelligence [9]. The VSI surgery pack provides pre-, intra-, and postoperative support for head and neck operations, pathology recognition, and precise diagnostics. VSI enables simultaneous representation of rendered bone and soft tissue, which is beneficial for preoperative planning and for intraoperative comparison of 3D CT and MRI scans, with increased precision achieved by overlaying cranial nerves and blood vessels.

Touch-free operations on medical datasets are achieved through voice commands, and in our approach [2,10] we would like to extend this with motion tracking, which enables more precise virtual movement, rotation, cutting, spatial locking, and measuring, as well as slicing through datasets. Global technological change will move towards automation, computer assistance, and robotics in different aspects of our lives, specifically for an aging society burdened with increased healthcare spending. Current needs in Computer Assisted Surgery (CAS) methods exceed contemporary educational limits. In our augmented reality approach, we would like to demonstrate how to design spatial anatomic elements from both the IT and the medical perspective. Augmented spatial anatomic elements, combined with 3D medical images and 4D medical videos and with touchless navigation in space through such complex data, should enable higher intraoperative safety and reduce operating time [10]. In preparing for any kind of surgery, the medical specialist must provide the most precise models possible of the part of the body that will undergo surgery [11]. In such cases, AR/VR solutions will diminish the differences between the real world and simulation through the use of VR headsets and touchless motion trackers.

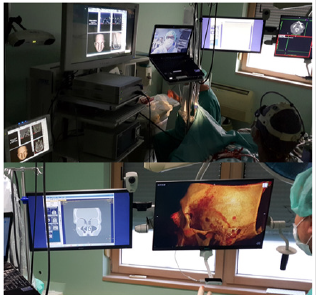

Thus, technology should deliver productivity advances, which require capable interdisciplinary developers in the fields of, among others, IT and medicine, who would help in shaping the educational approach. This project of “contactless non-invasive sinus surgery in rhinology” [2,6,10], once completely realized, would help overcome the challenges of the current trend of increasing technological change: by adopting different approaches and frameworks in such distributed, augmented virtual labs, physicians/surgeons could adapt to new technologies much faster and with less stress in any OR, enabling an easier transition from education to the workforce/OR. This newest “medical strategy” could be an important step to enhance surgeons’ capacities and increase their overall success, because real and virtual objects need to be integrated in the surgical field [12] (Figure 1) for a number of reasons, such as orientation in the operative field, where “overlapping” of the real and virtually created anatomic models is inevitable [13-15].

Figure 1: Examples of medical data analysis in real time with virtual reality enabled, in order to help the surgeon in decision making during surgery. We used OsiriX MD (a DICOM viewer certified for medical use), Leap Motion (a computer hardware sensor device that supports hand and finger motions as input), and our specially designed software that integrates the Leap Motion controller with medical imaging systems.

Our team has long been the leader in advanced surgery in otorhinolaryngology-head and neck surgery (3D-CAS/1994 [16,17], tele-3D-CAS/1998 [18,19], touchless “in the air” surgery/2015 [2,6,10]). Is it also today’s team of contemporary innovative surgery, offering the highest level of quality and of user experience in daily routine practice, using appropriate DICOM image viewers and “gesture motion” technology? Our newest project proposal [2,6,10] aims to shift such educational and clinical frontiers further, building on well-known solutions from VR while involving more experts from different scientific fields who could shape the research and educational process in different, interesting ways. Examples include medical diagnostics, surgery “in the air” in a virtual world that is simulated and modeled before the real surgery [6,20-22], and surgery in virtual anatomic surroundings assisted by a 3D digitizer with six degrees of freedom [11,23-26] (Figure 2).

Figure 2: Involvement of more experts from different scientific fields in preoperative analysis/teleanalysis in virtual surroundings, using a motion tracking camera for navigation through the data. We used voice commands for easier contactless navigation through the application (3D anatomy of the patient’s head).

That is why we want to take this illusion of reality in the OR, produced by VR perception/tools, to the next level (such as computer assisted navigation with a 3D surgical planner, or AR in the OR with the surgeon’s contactless “in the air” commands), adding a comprehensive interdisciplinary overview, knowledge, and experience. That is also why our project consortium consists of various IT, medical, and multimedia experts for the design of AR applications. This could be a good starting point for setting the benchmark and defining additional future research on learning/practice outcomes [27], with an added VR component that would shift this education paradigm to the next level in human medicine/surgery.

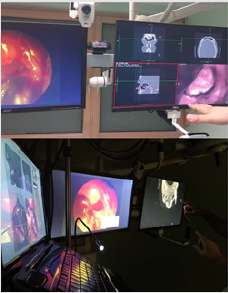

More specifically, for this research on shifting the VR experience in our education/tele-education and surgical/telesurgical [12,18] practice (as seen in Figures 1 and 2), we would need to equip a VR laboratory and to develop different SW-based AR scenarios that would enable the above-mentioned aim of creating a comprehensive and interdisciplinary environment in the OR (IT in medicine) (Figure 3). Furthermore, in our future plans, MSCT and MRI datasets can be displayed in 3D using the AR/VR headset. The operating surgeon can move them, rotate them, and even move around them. Additionally, the surgeon can “enter” the 3D models and view the pathology from all angles using touch-free commands, which makes even the most delicate rhinologic structures tangible and visible in rich detail [4], as already seen at the Stanford Neurosurgical Simulation and Virtual Reality Center.

Figure 3: Developing different SW-based AR scenarios based on the existing medical datasets, enhanced with virtual reality, in order to enable a comprehensive and interdisciplinary environment in the OR.

Material and Methods

Case Report

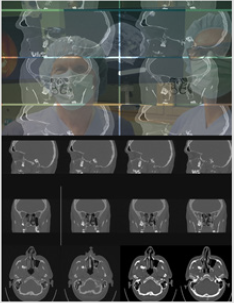

Figure 4: Telemed discussion about follicular cyst which heads out of the lateral wall of the maxillary sinus, with navigation through the frames in medical datasets in real time.

An adult male patient, Caucasian, NM, 57 years old, presented with test results raising suspicion of a follicular cyst spreading to the lateral wall of the maxillary sinus (Figure 4). The cyst was discovered during a routine radiology exam (MSCT of the nose/sinuses). It is large and is causing a deformity of the lateral wall of the maxillary sinus. These kinds of cysts are usually asymptomatic, but in the presence of a potential infection, the patient responds with pain on palpation of the lateral wall of the maxillary sinus (typical somatosensory pain in response to pressure, touch, vibration, etc.). Our patient, who in addition to the test results mentioned above also had an obstruction of the nasolacrimal duct, reported a similar kind of pain and headache.

It is known that odontogenic cysts have a characteristic appearance and that they never penetrate the maxillary sinus, but rather, as they grow, displace the sinus walls and compromise the sinus in that way. Radicular cysts are diagnosed most often because they can raise the floor of the maxillary sinus itself, while keratocysts and follicular cysts are rarely diagnosed. They can deform the posterior and lateral walls of the maxillary sinus, creating an image that resembles a duplication of the sinus. They usually form after the development of a calcified crown and are associated with wisdom teeth, as was the case with our patient (tooth 18 in the permanent dentition), but they can also be correlated with the canines as well as with the premolars of the upper jaw. Radiologically, they usually appear as a “unilocular radiolucency with the crown and the root of the mentioned tooth”. In the case of persisting (chronic) inflammation, ameloblastoma can arise from larger cysts, with possible later development of mucoepidermoid carcinoma as well as squamous cell carcinoma. A follicular cyst can cause pathological jaw fractures.

It is well known that pathology of the maxillary sinus of odontogenic origin is extremely important in rhinology, primarily because of the close anatomical contact between the sinus and the roots of the upper teeth, which, in an inflammatory process, can cause sinus inflammation. Along with periodontitis and odontogenic cysts, iatrogenic factors are a relatively frequent cause of maxillary sinus inflammation, occurring after dental treatment. Oroantral communication with an associated fistula is the leading iatrogenic cause, and it arises from tooth extraction, an everyday procedure in dental clinics. Therefore, with extra attention from dentists (a positive Valsalva test), every oroantral communication can be closed, so that chronic inflammation does not lead to the formation of an oroantral fistula.

Therapeutic approach: It should be emphasized that there is no way of preventing the emergence of follicular cysts of the maxillary sinus. Therapy is primarily based on treating the cause of the inflammation, followed by the application of antibiotics. After that comes treatment of the sinus itself with appropriate surgical methods. Of course, it should be borne in mind that inflammation of the maxillary sinus can occur spontaneously, and that reduction and remodeling of the maxillary sinus can occur in the presence of odontogenic lesions. The choice of surgical treatment for odontogenic lesions depends on their position and size. In the case of our patient, NM, the surgical treatment of choice was intraoral enucleation of the lesion. It could have been performed via a Caldwell-Luc type operation through the canine fossa or, as in our patient’s case, by osteoplastic contactless surgery of the sinus with the necessary removal of the tooth. Of course, it is essential to fully remove all pathological antral tissue. The described contactless surgical approach was chosen because this form of pathological substrate cannot be resolved with a minimally invasive approach and/or with medication.

OsiriX MD

Working with different 3D-ROI segments generated from 2D computed tomography data, we could use different tools in the OsiriX DICOM viewer. Tools such as polygon, brush, pencil, and other similar shapes enable precise selection of segments, whereas the more sophisticated 2D/3D grow region tool can automatically find the edges of the selected tissue by analyzing surrounding pixel density and recognizing similarities [2]. Additionally, in our previous activities, we used the LM sensor as an interface for camera positioning in 3DVR and VE views, with speech recognition as a VC solution, and for this purpose we developed our own plug-in application for the OsiriX platform [6]. Navigation in virtual space is achieved by assigning three orthogonal axes to the camera position control [2]. In 3DVR, the Y axis is translated to vertical orbit, the X axis to horizontal orbit, and the Z axis to zoom [10]. In VE, the camera gains acceleration based on the difference between the starting and ending Z positions, and horizontal and vertical camera turning are assigned to the X and Y axes, respectively [2,6,10].
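
The axis assignment described above can be illustrated with a minimal Python sketch. It is not the code of our OsiriX plug-in: the class and function names, the gain values, the dead zone, and the use of hand displacement in millimetres are all assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class OrbitCamera:
    """Hypothetical camera state for a 3DVR view."""
    azimuth_deg: float = 0.0    # horizontal orbit angle
    elevation_deg: float = 0.0  # vertical orbit angle
    zoom: float = 1.0           # magnification factor


def update_3dvr(cam, dx, dy, dz, orbit_gain=0.5, zoom_gain=0.01):
    """Map a hand displacement (assumed to be in mm) onto the 3DVR camera.

    X drives horizontal orbit, Y drives vertical orbit, Z drives zoom.
    The gains are illustrative assumptions, not measured values.
    """
    azimuth = (cam.azimuth_deg + orbit_gain * dx) % 360.0
    # Clamp the vertical orbit so the camera cannot flip over the poles.
    elevation = max(-90.0, min(90.0, cam.elevation_deg + orbit_gain * dy))
    # Keep the zoom strictly positive.
    zoom = max(0.1, cam.zoom * (1.0 + zoom_gain * dz))
    return OrbitCamera(azimuth, elevation, zoom)


def ve_acceleration(z_start, z_end, gain=0.2, dead_zone=5.0):
    """Virtual endoscopy: acceleration from the Z start/end difference.

    The dead zone (an assumption) ignores small, unintended hand drift.
    """
    delta = z_end - z_start
    if abs(delta) < dead_zone:
        return 0.0
    return gain * delta


if __name__ == "__main__":
    cam = update_3dvr(OrbitCamera(), dx=20.0, dy=10.0, dz=0.0)
    print(cam)
    print(ve_acceleration(0.0, 50.0))
```

Working with displacements rather than absolute hand positions is a design choice of this sketch; any real mapping would need to be tuned to the sensor and to the surgeon.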

Our Contactless-Hand-Gesture Non-Invasive Surgeon-Computer Interaction

One of the first steps in planning surgery is data acquisition. The concept here is to transform 3D imaging from diagnostic devices such as CT or MRI into a single patient model. Once the patient data are available, VR techniques together with depth and tracking cameras make it possible to analyze and segment the region of interest during preoperative preparation. The use of a depth and tracking camera (Intel RealSense D-400 Technology) enables an operation to be simulated, and the outcome viewed and analyzed, in the same environment as the real surgery before the patient undergoes it. This enables medical specialists to optimize their surgical approaches, with obvious advantages for patients and healthcare providers. Later, during surgery, the same techniques are used for navigation and to help the surgeon visualize and analyze the current progress of the operation. Moreover, it is possible to simulate the complete surgery from the surgeon’s field of vision (Figure 2), to see what might happen when a region of interest is removed or cut, and to prepare the medical specialist for key situations from different viewing angles, giving us completely new opportunities for diagnostics and surgical planning.
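
To make the segmentation step more concrete, the following minimal Python sketch shows one common way a region of interest can be grown from a seed pixel by comparing neighbouring intensities. It is a generic textbook-style region growing routine, not the algorithm implemented in OsiriX or in our software; the function name, the 4-connectivity, the fixed tolerance, and the toy image are all assumptions.

```python
from collections import deque


def grow_region(image, seed, tolerance):
    """Return the set of (row, col) pixels connected to `seed` whose
    intensity differs from the seed intensity by at most `tolerance`.

    `image` is a 2D list of numbers; neighbours are 4-connected.
    """
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region:
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


if __name__ == "__main__":
    # Toy slice: low values stand for soft tissue, high values for bone.
    toy_slice = [
        [10, 12, 200, 11],
        [11, 13, 210, 12],
        [205, 198, 202, 10],
    ]
    print(sorted(grow_region(toy_slice, seed=(0, 0), tolerance=10)))
```

In the toy slice, the low-intensity pixels in the right-hand column are not added to the region, because the high-intensity pixels separate them from the seed.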

“Contactless Surgery” and VR Applications in Medicine

Nowadays, applications of VR in medicine are growing tremendously. With VR glasses and other VR devices, it is possible to create an illusion of any kind of real-world application. This could mean using VR techniques for surgical planning or training [6,10], or using VR equipment such as simulators for navigation during minimally invasive surgery [28], where force-enabled virtual guidance is used in robotics-assisted surgery. Here, however, we would like to refer to VR techniques for contactless surgery applications based on the use of a motion tracking camera.

Our camera for depth and motion tracking

In order to provide the most immersive experience, the fidelity of the equipment may need to be tailored to surgical simulation. That is why we use a camera for depth and motion tracking (Intel RealSense D-400 Technology) with active stereo depth resolution and precise shutter sensors for depth streaming at up to 90 FPS, with a range of up to 10 meters. This range is important in the OR and gives the surgeon a sense of freedom during surgery and in preoperative planning (surgeons can “fly” through the patient’s head on screen [4]). The depth camera system uses a pair of identical cameras, referred to as imagers, configured with identical settings to calculate depth. The stereo camera views the same subject from slightly different perspectives, which gives it depth dimensionality. The difference between the perspectives is used to generate a depth map by calculating a numeric value for the distance from the imagers to every point in the scene. In order to use the depth and motion tracking camera properly, we needed to define some standard moves of the surgeon’s hand to speed up motion and depth recognition and to obtain more precise real-time outcomes. With this approach, we can achieve our contactless surgery [2,6,10] by using the controls of the depth and motion tracking camera together with a fully operable list of virtual movements reflecting standard moves through patient datasets, for example rotation, cutting, spatial locking, measuring, and easy slicing, with virtual movement and medical modelling accuracy.
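
The way a stereo pair turns the difference between two perspectives into distance can be sketched with the standard pinhole relation for a rectified stereo pair, depth = focal length × baseline / disparity. The Python sketch below is illustrative only: the function names are hypothetical, and the focal length, baseline, and disparity values are made-up numbers, not specifications of the camera we used.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres of one point seen by a rectified stereo pair.

    disparity_px    : horizontal shift of the point between the two images
    focal_length_px : focal length of the imagers, in pixels
    baseline_m      : distance between the two imagers, in metres
    """
    if disparity_px <= 0:
        # No measurable shift: the point is treated as out of range.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparities, focal_length_px, baseline_m):
    """Convert a 2D grid of disparities into a 2D grid of depths."""
    return [
        [depth_from_disparity(d, focal_length_px, baseline_m) for d in row]
        for row in disparities
    ]


if __name__ == "__main__":
    # Hypothetical values chosen only to keep the arithmetic readable.
    disparities = [[64.0, 32.0], [16.0, 0.0]]
    for row in depth_map(disparities, focal_length_px=640.0, baseline_m=0.05):
        print(row)
```

Because depth is inversely proportional to disparity, nearby structures such as the surgeon’s hand produce large disparities and are resolved more finely than distant ones.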

Discussion

Quantifying everyday life in a medical setting? A future in which telepathy will be made possible by connecting our brains to the ‘cloud’ (through the Internet)? Thinking as communication method in medicine? [29] In the near future, we will certainly have an incredible opportunity to share ideas, but would we get the “live” universal database, i.e. a “collective mind”, similar to the creation of a computing cluster resulting from the merging of a computer, with a “mind” located in a computing digital environment which allows better connection and communication than the currently available biological mode?

If, in today’s real world, without touching the screen during “in the air” contactless navigation surgery [10,29,30], we enable “virtual listening and sensing” [2] of 3D spatial data in the world of virtual diagnostics [31,32] and surgery [10], which does not exist in reality [19], we will shape the reaction of a human being (a surgeon) who thinks differently [29] and has impulses and certain tendencies; as a result, we define a completely new creative sense of understanding space and self-awareness. Of course, we are not yet at the level of the brain-computer interface, but we are certainly able to use technology that can make the invisible visible to doctors/surgeons, i.e., to let them experience and understand what we cannot realistically see [4], or what does not really exist. In this way, we “understand” the three-dimensional spatial relationships of the operating field as it is in human beings, that is, we “hear what is otherwise silent to us.” When this is fully achievable, we will surely quickly define a scenario of a universal language.

That is why our research project proposal in the field of contactless surgery would also go in the direction of monitoring learning and practical experience, as well as surgeons’ emotions while resolving gamified problems in the OR, and of comparing preoperative planning outcomes with outcomes during the real surgical activities produced by contemporary education and the previous planning process, as we have already published [2,6,10]. By enabling this new approach, we aim to achieve a sustainable environment for developing the skills that physicians/surgeons will need in the future.

If we would like to develop the medicine of the 21st century, we have to be more concise in order to highlight the novel methodology proposed with human-machine interaction (VR) and to prove why it is more convenient in diagnostic, surgical [33], and telesurgical procedures [34], such as those we have faced many times in our daily ENT clinic. Of course, VR is not a new technology. It is already widely used in different aspects of our everyday lives. AR, as an extension of VR, is also well known and widely applied in medicine and the broader healthcare industry. Current trends in medicine are mostly related to applying different disruptive technologies to enhance and support the decisions of medical experts. The message from the Stanford Neurosurgical Simulation and Virtual Reality Center [4] very clearly indicates that “VR can assist how they can approach a tumor and avoid critical areas like the motor cortex or the sensory areas. Before they did not have the ability to reconstruct it in 3D, which resulted in them having to depict it in their minds” [4].

This is one more reason why our research proposal of “contactless noninvasive ENT sinus surgery”, as previously published [2,6,10], would go in the direction of diagnostic/surgical applications, as well as monitoring learning, practical experience, and surgeons’ emotions while resolving gamified problems in the OR, and comparing preoperative planning outcomes with outcomes during the real surgical activities produced by contemporary education and the previous planning process. If we look at this article from a critical point of view, it must be said that its title is phrased as a question, without any details of the article’s content. The reader may think that it would be better if the title stated the key topic or concepts that the article aims to express.

Likewise, the authors of this article have come up with a demanding concept for the development of “surgery of the future”, based primarily on contactless diagnostics and/or surgery [2], simulation of surgery in a non-existent virtual world [6], application of navigation robotic systems in virtual operations before actual operations [10], etc., which sometimes makes this article quite difficult to read smoothly and makes it hard to understand the concepts and conclusions the authors are trying to convey. The reader may also get lost navigating specific sentences and must therefore search to recognize the key concepts.

Additionally, the supplied case report is not written in the standard way in which case reports are written in the medical literature, and it adds only a little to the article, with no later discussion tied back to the case report itself. We agree that this “remark” is also justified, especially since this case report is very rare and may deserve a more comprehensive presentation and analysis. Nonetheless, we would like to point out that the basic idea of this paper was not a more detailed analysis of the case report, but rather a description of the current state of understanding of the concept (contactless surgery) discussed in the article, as well as of the void in the literature this article serves to fill.

Project flow

a. Based on the surgeons’ feedback, we will improve the overall system by researching and adding features such as an augmented touch screen, voice control, and intelligent feedback in the OR, with the application of HW and SW solutions that give the surgeon touch-free 3D simulation and motion control of 2D and 3D medical images, as well as VS, in real time.

b. That is why we would need at least a couple of medical institutions to continuously monitor all impacts on surgeons’ perception, focus, orientation, and movement in the real world in correlation with the virtual environment, and vice versa.

c. With the development of the VR lab/labs, we will collect high volumes of personal data, which will be stored as securely as possible, to build our own data collections. Based on these data, we will further improve the features of the augmented reality and VR deliverables, which will be based on local surgeons’ needs and will be international at the level of the joint VR lab (Figure 5).

d. Finally, we predict that such technology-oriented education and/or medical practice will impact the global labor market by offering the next generation of highly skilled IT and medical specialists/surgeons, including their cooperation as well as tele-assistance (as seen in Figure 6). By enabling this new approach, we aim to achieve a sustainable environment for developing the skills that physicians/surgeons will need in their OR in the future (e.g. “additive manufacturing of medical models” [38], CAS, NESS, and the surgeon’s touch-free commands as the “biomechanics” of the new era in personalized contactless hand-gesture, non-invasive surgeon-computer interaction), as methods which exceed contemporary educational and clinical limits.

Figure 5: Process of designing spatial anatomic elements, from both the information technology and the medical perspective. Using the absolute value of the hand position in the sensor coordinate system as a deflection of camera angles requires additional space and involves a considerable amount of hand movement [2].

Conclusions

a. We presented the use of standard commercial hardware (Apple Mac mini) and SW (DICOM viewer SW and SW for hand-gesture non-invasive surgeon-computer interaction, with our recently created original plug-ins) to create 3D simulation of medical imaging (“different tissues in different colours” [12]) and touchless navigation/manipulation of images.

b. It is intended to be useful in the OR setting, where noncontact navigation methods would be of most benefit.

c. We propose this technology to study the anatomy of the preoperative patient and perform “incisionless” surgery in a simulated environment.

Figure 6: Application of virtual reality/augmented reality techniques in planning the surgery. The 3D virtual reality and virtual endoscopy views could then be used uninterrupted and unburdened with unnecessary data when not required, independently of which application environment and settings were used [2]. Such a dashboard could contain the most important functions and parameters concerning the current virtual reality [10] or virtual endoscopy context [10].

d. Moving beyond medical frontiers via AR, we demonstrated how to design spatial anatomic elements from both the IT and the medical perspective [2,6,10,11,19] (“the surgeons practice the procedure using images of an actual patient, rather than generic anatomy, allowing them to map out the exact path they will take during the surgery, ahead of time” [4]), as seen in Figure 5.

e. The use of augmented spatial anatomic elements, combined with 3D medical images and with touchless navigation in space through such complex data, enabled higher intraoperative safety and reduced operating time.

f. With above-mentioned methods, we will track surgeons who will use personal-3D-navigation systems (as we did/1994 [15,16]) with on the fly gesture-controlled incisionless surgical interventions, which is currently in our use [2].

g. The use of contactless surgery will show us how surgeons cope with these state-of-the-art technologies, as mentioned previously [34,35], allowing us to track ease of use and to educate them in virtual environments if necessary.

h. Application of these new skills in medicine/OR will show how closely surgeons approach real-life applications in the medicine of the 21st century (such as robotic surgical procedures [37]): “by immersing themselves in 3D-views of their patients’ anatomy, doctors can more effectively plan surgical procedures” [4] (Figure 7).

Figure 7: Proposal of our VR and/or tele-VR lab for medical practice. For our team, this routine preoperative [38] as well as intraoperative procedure in our operating room, which enables very precise and simple manipulation of virtual objects with the sense of physical presence at a virtual location [2,6], represents a more effective and safer endoscopic and virtual endoscopy procedure in our hands [10] in comparison with some “standard” endo-techniques.

i. Does this make possible a universal worldwide center of medical knowledge for contactless diagnostics and MIS surgery in the VR world, based on a “collective mind” created as a centralized consciousness through the hyper-connection of an infinite number of data sources? [39] This proposal moves towards forming Swarm Intelligence, a recent theory developed from the observation of animal behavior (ants, bees, butterflies), which led to the development of swarm intelligence applications [40]. There is, however, a significant difference in this comparison: swarm intelligence is decentralized. Conversely, by connecting to a “collective mind”, we will be able to share all knowledge and skills from a centralized source [41] because all minds will work together.

The ideas for developing the previously described system for ‘gesture-controlled incisionless surgical interventions’ are presented in our next scientific paper, entitled “Do we have biomechanics of the new era in our personalized contactless hand-gesture non-invasive surgeon-computer interaction?”

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Acknowledgment

The authors are grateful to Professor Heinz Stammberger, M.D.(†), Graz/Austria/EU, for his helpful discussion about NESS and contactless surgery (February/2018), and to Dr. Armin Stranjak, Lead software architect at Siemens Healthcare, Erlangen, Germany, EU, for his discussion about MSCT and MRI nose/sinus scans.

References

- University of Southern California, Institute for Creative Technologies: Medical Virtual Reality.

- Klapan I, Duspara A, Majhen Z, Benić I, Kostelac M, et al. (2017) What is the future of minimally invasive surgery in rhinology: marker-based virtual reality simulation with touch free surgeon's commands, 3D-surgical navigation with additional remote visualization in the operating room, or. Front Otolaryngol Head Neck Surg Global Open 1(1): 1-7.

- Houston Methodist Hospital -Texas Medical Center-Brainlab.

- Stanford Neurosurgical Simulation and Virtual Reality Center.

- European Strategy and Policy Analysis System: Global Trends to 2030: Can the EU meet the challenges ahead.

- Klapan I, Duspara A, Majhen Z, Benić I, Kostelac M, et al. (2019) What is the future of minimally invasive sinus surgery: computer assisted navigation, 3D-surgical planner, augmented reality in the operating room with 'in the air' surgeon's commands as a "biomechanics" of the new era in personalized contactless hand-gesture noninvasive surgeon-computer interaction? Biomedical J Scientific Technical Research 19(5): 14678-14685.

- QuantumRun: State of technology in 2030 | Future Forecast.

- Top Mental Health Challenges Facing Students.

- Klapan I, Duspara A, Majhen Z, Benić I, Trampuš Z, et al. (2019) Do we really need a new innovative navigation-non-invasive on the fly gesture-controlled incisionless surgery. J Scientific Technical Research 20(5): 15394-15404.

- Klapan I, Raos P, Galeta T (2013) Virtual Reality and 3D computer assisted surgery in rhinology. Ear Nose Throat 95(7): 23-28.

- Klapan I, Vranješ Ž, Prgomet D, Lukinović J (2008) Application of advanced virtual reality and 3D computer assisted technologies in tele-3D-computer assisted surgery in rhinology. Coll Antropol 32(1): 217-219.

- Knezović J, Kovač M, Klapan I, Mlinarić H, Vranješ Ž, et al. (2007) Application of novel lossless compression of medical images using prediction and contextual error modeling. Coll Antropol 31(4): 1143-1150.

- Frank-Ito D, Kimbell J, Laud P, Garcia G, Rhee J (2014) Predicting post-surgery nasal physiology with computational modeling: current challenges and limitations. Otolaryngol Head Neck Surg 151(5): 751-759.

- Klapan I, Šimičić Lj, Rišavi R, Bešenski N, Bumber Ž, et al. (2001) Dynamic 3D computer-assisted reconstruction of metallic retrobulbar foreign body for diagnostic and surgical purposes [Case report: orbital injury with ethmoid bone involvement]. Orbit 20(1): 35-49.

- Klapan I, Šimičić Lj, Bešenski N, Bumber Ž, Janjanin S, et al. (2002) Application of 3D-computer assisted techniques to sinonasal pathology-Case report: war wounds of paranasal sinuses with metallic foreign bodies. Am J Otolaryngol 23(1): 27-34.

- Klapan I, Vranješ Ž, Prgomet D, Lukinović J (2008) Application of advanced virtual reality and 3D computer assisted technologies in tele-3D-computer assisted surgery in rhinology. Coll Antropol 32(1): 217-219.

- Klapan I, Šimičić Lj, Rišavi R, Pasari K, Sruk V, et al. (2002) Real time transfer of live video images in parallel with 3D-modeling of the surgical field in computer-assisted telesurgery. J Telemed Telecare 8(3): 125-130.

- Klapan I, Vranješ Ž, Prgomet D, Lukinović J (2008) Application of advanced Virtual Reality and 3D computer assisted technologies in computer assisted surgery and tele-3D-computer assisted surgery in rhinology. Coll Antropol 32(1): 217-219.

- Tan JH, Chao C, Zawaideh M, Roberts AC, Kinney TB (2013) Informatics in Radiology: developing a touchless user interface for intraoperative image control during interventional radiology procedures. Radiographics 33(2): E61–70.

- Tan JH, Chao C, Zawaideh M, Roberts AC, Kinney TB (2013) Informatics in Radiology: developing a touchless user interface for intraoperative image control during interventional radiology procedures. Radiographics 33(2): 61–70.

- Hötker AM, Pitton MB, Mildenberger P, Düber C (2013) Speech and motion control for interventional radiology: requirements and feasibility. Int J Comput Assist Radiol Surg 8(6): 997–1002.

- Klapan I, Raos P, Galeta T, Kubat G (2016) Virtual reality in rhinology – a new dimension of clinical experience. Ear Nose Throat 95(7): 23-28.

- Zachow S, Muigg P, Hildebrandt T, Doleisch H, Hege H (2009) Visual exploration of nasal airflow. IEEE Trans Vis Comput Graph 15(6): 1407–1414.

- Yael Kopelman, Raymond J Lanzafame, Doron Kopelman (2013) Trends in evolving technologies in the operating room of the future. JSLS 17(2): 171–173.

- Guillermo M Rosa, María L Elizondo (2014) Use of a gesture user interface as a touchless image navigation system in dental surgery: Case series report. Imaging Sci Dent 44(2): 155–160.

- Ruppert GC, Reis LO, Amorim PH, De Moraes TF, Da Silva JV (2012) Touchless gesture user interface for interactive image visualization in urological surgery. World J Urol 30(5): 687–691.

- Lövquist E, Shorten G, Aboulafia A (2012) Virtual reality-based medical training and assessment: the multidisciplinary relationship between clinicians’ educators and developers. Med Teach 34(1): 59-64.

- Peters TM, Linte CA, Yaniv Z, Williams J (2018) Mixed and Augmented Reality in Medicine. CRC Press. Series: Series in Medical Physics and Biomedical Engineering pp. 888.

- https://vsi.health/en/

- Jacob MG, Wachs JP, Packer RA (2012) Hand-gesture-based sterile interface for the operating room using contextual cues for the navigation of radiological images. J Am Med Inform Assoc 20: 183–186.

- Wachs JP, Stern HI, Edan YG, Handler M, Feied J (2008) A gesture-based tool for sterile browsing of radiology images. J Am Med Inform Assoc 15(3): 321–323.

- Ebert LC, Hatch G, Ampanozi G, Thali MJ, Ross S (2012) You can't touch this: touch-free navigation through radiological images. Surg Innov 19(3): 301–307.

- Citardi MJ, Batra PS (2007) Intraoperative surgical navigation for endoscopic sinus surgery: rationale and indications. Otolaryngol Head Neck Surg 15(1): 23-27.

- Klapan I, Šimičić Lj, Rišavi R, Bešenski N, Pasarić K, et al. (2002) Tele-3D-Computer Assisted Functional Endoscopic Sinus Surgery: new dimension in the surgery of the nose and paranasal sinuses. Otolaryngol Head Neck Surg 127: 549-557.

- Weichert F, Bachmann D, Rudak B, Fisseler D (2013) Analysis of the accuracy and robustness of the leap motion controller. Sensors (Basel) 13: 6380–6393.

- Ben Joonyeon Park, Taekjin Jang, Jong Woo Choi, Namkug Kim (2016) Gesture-Controlled Interface for Contactless Control of Various Computer Programs with a Hooking-Based Keyboard and Mouse-Mapping Technique in the Operating Room. Comput Math Methods Med 3: 1-7.

- Caversaccio M, Gerber K, Wimmer W, Williamson T, Anso J, et al. (2017) Robotic cochlear implantation: surgical procedure and first clinical experience. Acta Otolaryngol 137(4): 447-454.

- Raos P, Klapan I, Galeta T (2015) Additive manufacturing of medical models – applications in rhinology. Coll Antropol 39(3): 667-673.

- Burgin M, Eberbach E (2012) Evolutionary automata: Expressiveness and convergence of evolutionary computation. Comput J 55: 1023-1029.

- Burgin M (2017) Swarm Superintelligence and Actor Systems. Int J Swarm Intelligence Evolutionary Computation 6(3): 1-13.

- Mayer JD, Salovey P (1993) The intelligence of emotional intelligence. Intelligence 17: 433-442.

Research Article