Abstract

Objectives: This study presents the use of our original contactless interface as a plug-in application for the OsiriX DICOM viewer platform, using a hardware sensor device controller that accepts hand and finger motions as input and requires no hand contact, touching, or voice navigation. This would make it possible to modify standard surgical parameters on the fly during gesture-controlled incisionless surgical interventions.

Methods: The accuracy of computer-generated models was analyzed according to T. Galeta (2017). Our original plug-in application provided different types of gestures for three-dimensional virtual reality navigation. The hardware sensor device, which controls the system without the need to touch any other device, served as the interface for camera positioning in three-dimensional virtual endoscopy views. A panoramic three-dimensional volume rendering view was obtained by pivoting the camera around a focal point fixed on the object.

Results: This novel technique enables surgeons to achieve complete and conscious orientation in the operative field, where ‘overlapping’ of real and virtual anatomic models is inevitable. Our understanding of this new type of surgery develops by creating completely new models of human behavior and of spatial relationships, together with assessments that provide insight into human nature. Any model of the surgical field, real or virtual, is defined as it actually exists in its natural surroundings.

Conclusion: We offer an alternative to closed software systems for visual tracking and propose an initiative to develop a software framework that interfaces with depth cameras and provides a set of standardized methods for medical applications such as hand gestures and tracking, face recognition, and navigation. This software should be open source, operating-system agnostic, approved for medical use, and hardware independent. Comparison with previous doctrine in human medicine clearly indicates that both preoperative and intraoperative manipulation of three-dimensional volume-rendered slices of human anatomy per viam a touchless surgical navigation system, with simulation of virtual activities, has become a reality in the operation room.

Keywords: Contactless Surgery; Gesture Control; Voice Commands; Virtual Endoscopy; Virtual Surgery; Swarm Intelligence

Abbreviations: AR: Augmented Reality; CAS: Computer Assisted Surgery; DICOM: Digital Imaging and Communications in Medicine; ENT: Ear, Nose, Throat; FESS: Functional Endoscopic Sinus Surgery; HW: Hardware; IT: Information Technology; LM: Leap Motion; MacOS: Operating Systems Developed and Marketed by Apple; MIS: Minimally Invasive Surgery; MRI: Magnetic Resonance Imaging; MSCT: Multislice Computed Tomography; NCAS: Navigation Computer Assisted Surgery; NESS: Navigation Endoscopic Sinus Surgery; OMC: Ostiomeatal Complex; OR: Operation Room; SI: Swarm Intelligence; SW: Software; SWOT: Strengths, Weaknesses, Opportunities, Threats; TIM: Total Imaging Matrix Technology; TM: Telemedicine; TS: Telesurgery; VC: Voice Command; VE: Virtual Endoscopy; VS: Virtual Surgery; VR: Virtual Reality; VRen: Volume Rendering; VW: Virtual World; Xcode IDE: Apple’s Integrated Development Environment; 2D/3D/4D: Two-Dimensional/Three-Dimensional/Four-Dimensional; 3DVR: Three-Dimensional Volume Rendering

Introduction

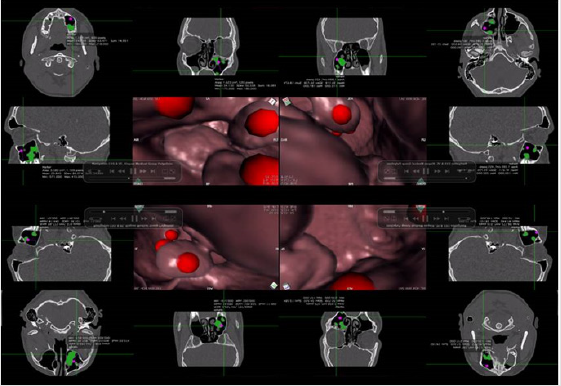

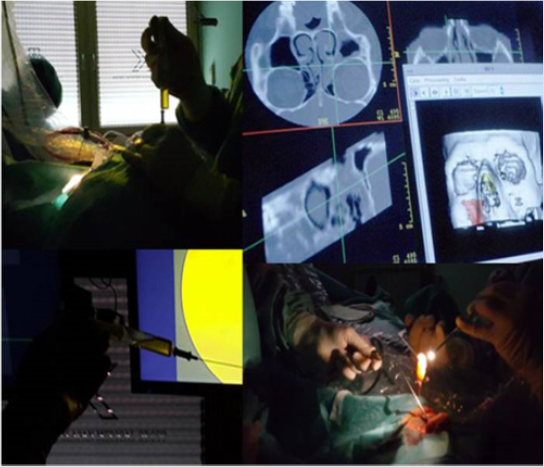

“The right treatment to the right patient at the right time is the simplest definition of personalized medicine of the 21st century, all with a view to making an early diagnosis and deciding on an optimal treatment option” (D. Primorac). The best way to predict the future is to create it ourselves. Today, our perception of human anatomy can be deliberately ‘deceived’: true reality can be replaced with simulated reality [1-3], which enables precise, safer and faster diagnosis [4] and surgery, creating an impression of another ‘external’ world around the person [5,6]. Through the concept of simulated reality, almost the same environment can be achieved as in true reality. Traditionally, simulated reality is considered an extension of virtual reality (VR), which is widely used in different telemedicine (TM) platforms; in our work (Figure 1), however, we would like to elaborate on the need to improve these VR tools in order to achieve a better user experience both in preoperative virtual analysis and during surgery. Beyond the well-known benefits of adopting VR tools in surgery, such as better planning, high-quality data analysis and simulation, we would like to introduce our original proposal for touchless control of VR tools in the operation room (OR), to come as close as possible to the concept of simulated reality, as we have reported previously [2,5].

Figure 1: 2D/3D visualization of the patient’s anatomic ROI-slices, supported by VE (preoperatively) and VS (intraoperatively). Navigating through narrow pathways in VE, we noticed that the camera could stay in the tissue [2,5], thus enabling a substantially different understanding of spatial relations (as exemplified by the nose/sinuses) between 2D and 3D images of human anatomy.

But, in view of possible criticism, even in amicable discussion with colleagues, our innovative “on the fly gesture-controlled” ear, nose and throat (ENT) diagnostics and surgery could be understood as a personal reflection on standard, well-known navigation-noninvasive surgery, one that requires additional citations to verify its claim of originality. That is why we employed the following:

a. Pre- and postoperatively, the most widely used standard, Digital Imaging and Communications in Medicine (DICOM), with advanced post-processing techniques in two-dimensional (2D)/three-dimensional (3D), as well as four-dimensional (4D) navigation (OsiriX MD);

b. A hardware (HW) sensor device that supports hand and finger motions as input, thus requiring no hand contact or touching (Intel RealSense depth camera and/or Leap Motion (LM Inc., San Francisco, CA, USA)); and

c. Our original, specially designed software (SW) that integrates the LM controller with medical imaging systems [2,5] and is completely different from products already described in the medical literature (Figure 2).

Figure 2: Preoperative and intraoperative virtual analysis of the patient’s anatomy/pathologic tissue, with a very clear distinction between the interpretation of gestures (LM) in 3D-VRen and in VE per viam ‘on the fly gesture-controlled’ different parameters/manner of movement while navigating through the virtual space.

Rather than a fictional virtual world (VW), our 3D volume rendering (VRen) solution relies on real inputs from gesture control and manner of movement, extending simple VRen to meet the real needs perceived by the medical specialist. Accordingly, using our original, specially designed SW that integrates the LM controller with medical imaging systems, developed by our information technology (IT) team, we can very precisely and successfully (pre- and perioperatively) ‘assess’ all anatomic relations within the patient’s head (taken with permission of Klapan Medical Group Polyclinic, Zagreb, Croatia, EU; www.poliklinika-klapan.com). In this way, as seen previously in similar medical fields [6,7], we wanted to create in our OR an impression of virtual perception in the new VW of all elements of the real anatomy of the patient’s head, with a completely new understanding of the given position of the region of interest (virtual perception) [8] (Figure 3).

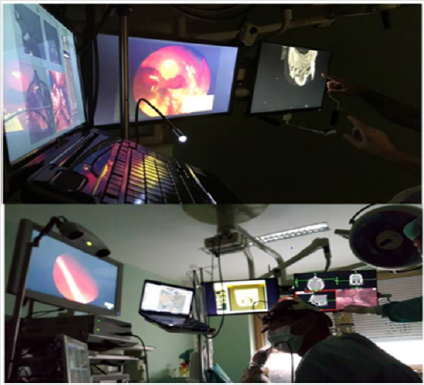

Data processing and visualization are performed in real time, enabling multiple interactions with the simulation. Additionally, we tried to substitute artificially generated sensations for the standard daily information received by our senses, as we have reported previously [9]. We have minimized surgeon distraction, intervention time and anesthesia time while interacting with the positioning system per viam ‘different types of gestures’ and our original plug-in application for the DICOM viewer (designed by our Bitmedix IT team; www.bitmedix.com). The plug-in enables ‘navigation through’ the VW, using the LM sensor as an interface for camera positioning in 3D virtual endoscopy (VE)/virtual surgery (VS) [1,2], and integrates speech recognition as a voice command (VC) solution in an original way [5]. Our gestures provided easy interaction with real-world elements, pre- and intraoperatively, per viam natural movements while navigating (VE/VS) in the patient’s head during surgery/telesurgery (TS) [2] (Figure 4). In this way, we enabled access to crucial surgical data without the need to touch any kind of interface, in order to mitigate the risk of infection in the OR.

Figure 3: We prefer the system in the OR which guides the surgeon through the SW, with no need ‘to press any button’.

Figure 4: NESS/tele-NESS, 2D-MSCT unlimited movie and VE/LM-surgery in ‘nonexisting virtual space’. In the OR, we also integrated speech recognition as a VC-solution.

Material and Methods

Case Report

Patient: male, born 1964. Status: all maxillary teeth had been treated with a combined fixed-removable prosthetic approach ten years earlier. The present status was nonfunctional because teeth 18, 15, 14, 13, 11, 21 and 22 had failed as abutments due to grade 2 mobility. Teeth 15, 14, 13 and 21 had been treated endodontically ten years earlier. Current diagnostics (3D multislice computed tomography (MSCT), magnetic resonance imaging (MRI), x-ray) revealed periodontal fissures and periapical shadows, indicating infection and general prosthetic overload on the remaining teeth as bridge abutments. Consequently, the mentioned teeth (15, 14, 13) had caused de novo formation of pathologic tissue (homogeneous shadowing) in the right maxillary sinus. Mandibular teeth had been treated and were free from visible pathologic signs. The rest of the maxillary sinus and the sinus ostium towards the middle nasal meatus were normal, as was the ipsilateral ostiomeatal complex (OMC) (Figure 5).

Figure 5: MSCT diagnostics showed the presence of an oroantral fistula at the site of a previously extracted tooth and partial prolapse of a large cystic neoplasm from the maxillary sinus through the oroantral fistula into the oral cavity.

Our Contactless Diagnostics and Surgery

There is great potential in the medical use of virtual and augmented reality (AR), which has not yet been fully explored. These new interfaces increase the capabilities of interacting with rendered volumes and make them more convincing in the real world. This enhanced sense of inclusion eliminates the need for imagination, which depends on individual perception and knowledge of the specific situation. Because of its very high precision, LM is a good candidate for medical use in cases where the surgeon works with a 3D-rendered model. Examples of such integration can be found in the literature, and these solutions are often oriented towards multiplatform use. Using message hooking techniques, the recognized gestures can be mapped directly to platform-specific functions, mainly keyboard shortcuts, and the hand position can be translated into the mouse position on the screen.
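To make the message-hooking approach concrete, the sketch below shows only its mapping step in Swift, the language of our plugin: a lookup table from recognized gestures to keyboard shortcuts, and a linear mapping of a hand position onto screen pixels. The `Gesture` cases, the shortcut strings and the assumption that hand positions arrive already normalized to [0, 1] are hypothetical; the operating-system calls that would actually inject key and mouse events are deliberately left out, and no Leap Motion SDK API is reproduced.

```swift
// Hypothetical sketch of the mapping step behind "message hooking":
// gestures become keyboard shortcuts, and the hand position stands in
// for the mouse cursor. Names and key bindings are illustrative only.

enum Gesture: Hashable {
    case swipeLeft, swipeRight, circle
}

struct ScreenPoint: Equatable {
    var x: Double
    var y: Double
}

/// Assumed gesture-to-shortcut table (hypothetical key bindings).
let shortcutTable: [Gesture: String] = [
    .swipeLeft: "cmd+[",
    .swipeRight: "cmd+]",
    .circle: "cmd+r",
]

/// Maps a hand position already normalized to [0, 1] on each axis onto a
/// screen of the given size. Out-of-range values are clamped, and the
/// vertical axis is flipped because screen y grows downward.
func cursorPosition(normalizedX: Double, normalizedY: Double,
                    screenWidth: Double, screenHeight: Double) -> ScreenPoint {
    let nx = min(max(normalizedX, 0), 1)
    let ny = min(max(normalizedY, 0), 1)
    return ScreenPoint(x: nx * screenWidth, y: (1 - ny) * screenHeight)
}

let p = cursorPosition(normalizedX: 0.5, normalizedY: 0.25,
                       screenWidth: 1920, screenHeight: 1080)
print(p.x, p.y) // 960.0 810.0
print(shortcutTable[.circle] ?? "none") // cmd+r
```

Every interaction is thus reduced to a cursor position or a key press, which is why such an interface behaves like mouse mimicking rather than a native 3D control.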

In practice, the quality of the LM device in these cases enables a good user experience, but the additional complexity of nonspecific platform integration results in a steep learning curve, because the interface (mouse mimicking) is not fully natural to humans. This characteristic also decreases the quality of space perception in 3D virtual rendered space, which is unacceptable in medical use, especially in the OR environment. While these solutions address the problems of standard computer interfaces (such as mouse and keyboard) related to the potential risk of bacterial infection, they do not make use of the benefits of space perception and orientation provided by VR devices such as the LM sensor. In our solution, we propose integration at the platform level, which distinguishes our contactless interface from the others.

We have found that creating application-specific functions dedicated only to events from the VR sensor simplifies the interaction and gives a greater sense of control. The performance gains are far greater than those of solutions based on message hooking. First, other system functions are not hijacked by the sensor, which allows simultaneous use of other interfaces. Furthermore, this solution enables more advanced use of sensor functionality, such as triggers positioned in the working space, simultaneous orbit and zoom with only one hand, and advanced two-hand gestures for functions such as crop.
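Two of the advanced functions named above can be sketched without any sensor SDK. In this hypothetical Swift example, a trigger is an axis-aligned box in the sensor working space, and a two-hand crop box takes the two palm positions as opposite corners. The `Vec3`, `TriggerZone`, `CropBox` and `cropBox` names, the millimetre units and the coordinate values are illustrative assumptions, not the plugin’s actual code.

```swift
// Hypothetical sketch: a trigger region in the sensor working space and a
// two-hand crop box. Coordinates are assumed to be millimetres in the
// sensor's frame of reference; all names are illustrative.

struct Vec3: Equatable {
    var x: Double
    var y: Double
    var z: Double
}

/// Axis-aligned box in the working space; a palm inside it fires the trigger.
struct TriggerZone {
    let minCorner: Vec3
    let maxCorner: Vec3

    func contains(_ p: Vec3) -> Bool {
        let insideX = p.x >= minCorner.x && p.x <= maxCorner.x
        let insideY = p.y >= minCorner.y && p.y <= maxCorner.y
        let insideZ = p.z >= minCorner.z && p.z <= maxCorner.z
        return insideX && insideY && insideZ
    }
}

/// Crop volume spanned by the two palms, taken as opposite corners.
struct CropBox: Equatable {
    var lower: Vec3
    var upper: Vec3
}

func cropBox(leftPalm a: Vec3, rightPalm b: Vec3) -> CropBox {
    CropBox(
        lower: Vec3(x: min(a.x, b.x), y: min(a.y, b.y), z: min(a.z, b.z)),
        upper: Vec3(x: max(a.x, b.x), y: max(a.y, b.y), z: max(a.z, b.z))
    )
}

// Example: a trigger zone above the sensor, then a two-hand crop gesture.
let zone = TriggerZone(minCorner: Vec3(x: -40, y: 250, z: -40),
                       maxCorner: Vec3(x: 40, y: 320, z: 40))
print(zone.contains(Vec3(x: 0, y: 280, z: 0))) // true

let box = cropBox(leftPalm: Vec3(x: -80, y: 150, z: 20),
                  rightPalm: Vec3(x: 60, y: 220, z: -30))
print(box.lower.z, box.upper.z) // -30.0 20.0
```

Because these functions consume sensor events directly, neither needs to be expressed as a mouse position or keyboard shortcut.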

Medical Imaging

In all our studies, we used a Siemens MAGNETOM Espree 1.5T MRI scanner and a SOMATOM Force MSCT scanner system (Agram Special Hospital, Zagreb, Croatia, EU; https://www.agram-bolnica.hr/oprema/). Thanks to the advanced Total Imaging Matrix (TIM) technology, which provides more detailed data because it uses more high-channel coils than other comparable devices and exploits the maximum signal-to-noise ratio, we are able to achieve more accurate modifications of standard surgical parameters and navigation during the previously described innovative navigation-noninvasive contactless gesture-controlled surgery. The generated data are of sufficient quality to be extended with AR/VR tools. For preoperative image reconstruction and display, we use Siemens syngo applications to maximize standardization in slice positioning, specifically the syngo BLADE motion-insensitive Turbo Spin Echo sequence for motion correction.

Results

This section describes the technical and implementation details of our novel contactless hand-gesture applications in diagnostics and noninvasive surgery. For the application demo, we decided to develop a plugin for the OsiriX platform that provides a natural gesture interface for the VRen and VE viewers. The plugin is written in the Swift language for the MacOS platform. To gain control over OsiriX, its application interface framework is used, in which the functions of the OsiriX platform are accessed via the available header files. Because OsiriX is written entirely in Objective-C, bridging headers were created to import these functions into Swift. The Leap software development kit was also included in the project to provide hand-gesture and position recognition functions.

The first problem encountered was that the functions of the VR view needed to create a natural interface were protected by the class. To resolve this issue, a wrapper class was created that extends the VR view class and provides an interface with static methods to gain access to the OsiriX VR view controller. Then an instance of the LM controller was created, and callbacks responding to device connection events were set. When the device is connected, the frame handler starts to process the information received from it. Two different frame handlers were developed, one for the VRen use case and one for VE. The frame handlers are triggered when the right hand is present with a grab strength of 0.05. All inputs are then buffered to avoid oversensitivity of the sensor.
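A minimal sketch of the frame-handler gate and input buffering described above, written against a hypothetical, SDK-independent `HandSample` type rather than the real Leap Motion frame API. The text states only that the handlers are triggered with a grab strength of 0.05; treating this value as an upper bound (an essentially open hand) is an assumption of this sketch, as is using a five-frame moving average as the buffer.

```swift
// Hypothetical sketch of the frame-handler gate and input buffering.
// HandSample stands in for one tracked hand in a sensor frame; it is not
// the Leap Motion SDK type.

struct Vec3: Equatable {
    var x: Double
    var y: Double
    var z: Double
}

struct HandSample {
    enum Side { case left, right }
    let side: Side
    let grabStrength: Double // assumed convention: 0 = open hand, 1 = fist
    let position: Vec3
}

/// Sliding-window average that damps jitter in the raw positions.
struct MovingAverage {
    let capacity: Int
    private var window: [Vec3] = []

    init(capacity: Int) {
        precondition(capacity > 0, "window must hold at least one sample")
        self.capacity = capacity
    }

    /// Adds a sample and returns the mean of the samples currently in the window.
    mutating func add(_ v: Vec3) -> Vec3 {
        window.append(v)
        if window.count > capacity { window.removeFirst() }
        let sum = window.reduce(Vec3(x: 0, y: 0, z: 0)) {
            Vec3(x: $0.x + $1.x, y: $0.y + $1.y, z: $0.z + $1.z)
        }
        let n = Double(window.count)
        return Vec3(x: sum.x / n, y: sum.y / n, z: sum.z / n)
    }
}

struct FrameGate {
    let grabThreshold: Double
    var smoother: MovingAverage

    init(grabThreshold: Double = 0.05, windowSize: Int = 5) {
        self.grabThreshold = grabThreshold
        self.smoother = MovingAverage(capacity: windowSize)
    }

    /// Returns a smoothed right-hand position when the frame should drive the
    /// camera, or nil when no right hand is present or the gate is closed.
    mutating func process(hands: [HandSample]) -> Vec3? {
        // ASSUMPTION: 0.05 is treated as an upper bound on grab strength.
        guard let right = hands.first(where: { $0.side == .right }),
              right.grabStrength <= grabThreshold else { return nil }
        return smoother.add(right.position)
    }
}

var gate = FrameGate()
_ = gate.process(hands: [HandSample(side: .right, grabStrength: 0.0, position: Vec3(x: 10, y: 0, z: 0))])
let smoothed = gate.process(hands: [HandSample(side: .right, grabStrength: 0.0, position: Vec3(x: 20, y: 0, z: 0))])
print(smoothed?.x ?? .nan) // 15.0
```

Averaging over a short window trades a few frames of latency for steadier camera motion; the window size is a tuning parameter, not a value reported in the text.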

In VRen, the x-axis component of the vector received from the sensor is translated into the camera azimuth, the y-axis component into the elevation, and the z-axis component into the zoom. This gives the effect of orbiting around the center of the object. To improve performance, the level of detail is lowered while the frame handler is triggered; when the hand exits the sensor working area, the level of detail is returned to the maximum value. For VE, the idea was to achieve a fly-through effect. First, the x and y components are used as the yaw and pitch of the camera, and the z component increases or decreases the total moving speed. The OsiriX application programming interface is used to get the current direction and position of the camera. The camera position is then translated by the total speed amount in the current camera direction, and the same translation is applied to the focal point of the camera.
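The two mappings above can be illustrated with a small, self-contained Swift sketch. For VRen, hand-vector components scale linearly into azimuth, elevation and zoom increments; for the VE fly-through, the step updates yaw, pitch and speed and then moves the camera along its view direction. The gains, the pitch clamp, the yaw/pitch-to-direction convention and the `FlyCamera` type are assumptions; in the plugin, camera direction and position come from the OsiriX API, which is not reproduced here.

```swift
import Foundation

// Hypothetical sketch of the two camera mappings; gains, clamps and the
// yaw/pitch convention are illustrative, not values from the plugin.

struct Vec3: Equatable {
    var x: Double
    var y: Double
    var z: Double

    static func + (a: Vec3, b: Vec3) -> Vec3 {
        Vec3(x: a.x + b.x, y: a.y + b.y, z: a.z + b.z)
    }

    static func * (v: Vec3, s: Double) -> Vec3 {
        Vec3(x: v.x * s, y: v.y * s, z: v.z * s)
    }
}

// VRen: the hand vector's x, y, z drive azimuth, elevation and zoom.
struct OrbitDelta: Equatable {
    var azimuth: Double
    var elevation: Double
    var zoom: Double
}

func orbitDelta(hand: Vec3, gain: Double) -> OrbitDelta {
    OrbitDelta(azimuth: hand.x * gain, elevation: hand.y * gain, zoom: hand.z * gain)
}

// Level of detail: reduced while the hand drives the view, full otherwise.
enum RenderQuality { case interactive, full }

func renderQuality(handInWorkingArea: Bool) -> RenderQuality {
    handInWorkingArea ? .interactive : .full
}

// VE: fly-through camera.
struct FlyCamera {
    var position: Vec3
    var yaw: Double    // radians, about the vertical axis
    var pitch: Double  // radians, up/down
    var focalDistance: Double
    var speed: Double = 0

    /// Unit view direction for the current yaw and pitch.
    var direction: Vec3 {
        Vec3(x: cos(pitch) * sin(yaw), y: sin(pitch), z: cos(pitch) * cos(yaw))
    }

    /// Focal point kept at a fixed distance ahead of the camera.
    var focalPoint: Vec3 { position + direction * focalDistance }

    mutating func step(hand: Vec3, turnGain: Double, speedGain: Double) {
        yaw += hand.x * turnGain
        let limit = Double.pi / 2 - 0.01 // avoid flipping over the poles
        pitch = min(max(pitch + hand.y * turnGain, -limit), limit)
        speed = max(0, speed + hand.z * speedGain) // z raises or lowers speed, floored at 0
        // Move along the current direction; the focal point follows by the same offset.
        position = position + direction * speed
    }
}

let delta = orbitDelta(hand: Vec3(x: 10, y: -5, z: 2), gain: 0.5)
print(delta.azimuth, delta.elevation, delta.zoom) // 5.0 -2.5 1.0

var camera = FlyCamera(position: Vec3(x: 0, y: 0, z: 0), yaw: 0, pitch: 0, focalDistance: 50)
camera.step(hand: Vec3(x: 0, y: 0, z: 10), turnGain: 0.01, speedGain: 0.1)
print(camera.position.z, camera.focalPoint.z) // 1.0 51.0
```

Deriving the focal point from position plus direction means that translating the camera automatically carries the focal point along by the same offset, which corresponds to the final step described in the text.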

The plugin can be compiled in the Xcode IDE on MacOS and then easily installed on the OsiriX platform. After installation, the plugin resides in the OsiriX environment, waiting for the device to connect. Once the device is connected, the frame handler waits for the VR or VE view to be opened; when the view is opened, the sensor is ready to use. This interface does not interfere with any other function of the OsiriX platform and can be used simultaneously with the classic mouse interface.
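The start-up sequence above behaves like a small state machine. The following hypothetical Swift sketch derives the plugin state from two flags (device connected, VR/VE view open), so that events arriving in any order give a consistent result; the type and event names are illustrative and do not correspond to OsiriX or Leap Motion APIs.

```swift
// Hypothetical sketch of the plugin lifecycle described above.

enum PluginState: Equatable {
    case waitingForDevice
    case waitingForView
    case ready
}

enum PluginEvent {
    case deviceConnected, deviceDisconnected, viewOpened, viewClosed
}

struct PluginStatus {
    var deviceConnected = false
    var viewOpen = false

    /// Records a connection or view event.
    mutating func handle(_ event: PluginEvent) {
        switch event {
        case .deviceConnected: deviceConnected = true
        case .deviceDisconnected: deviceConnected = false
        case .viewOpened: viewOpen = true
        case .viewClosed: viewOpen = false
        }
    }

    /// The device must be connected first; then a VR or VE view must be open.
    var state: PluginState {
        guard deviceConnected else { return .waitingForDevice }
        return viewOpen ? .ready : .waitingForView
    }

    /// Sensor frames drive the camera only when ready; mouse input is never
    /// routed through this logic, so the classic interface keeps working.
    var acceptsSensorInput: Bool { state == .ready }
}

var status = PluginStatus()
status.handle(.deviceConnected)
print(status.state) // waitingForView
status.handle(.viewOpened)
print(status.acceptsSensorInput) // true
```

Keeping the two conditions as independent flags, rather than a strict sequence of transitions, avoids a stuck state if a view is already open when the sensor is plugged in.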

Discussion

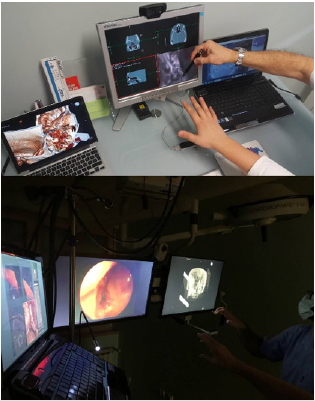

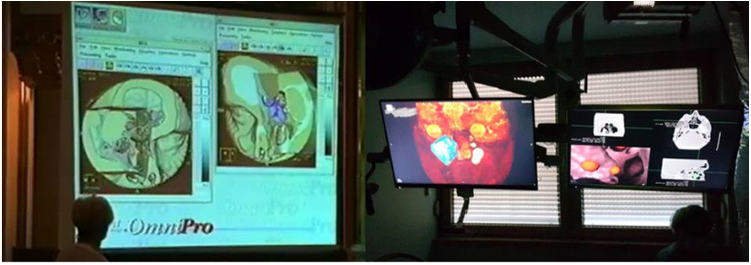

“There is no reality. Only our personal view on the reality”, even in the operation room. If this view of the surrounding world is correct, we as physicians are justified in asking whether our consciousness/awareness thereby creates a new reality or new forms of reality in diagnostics and surgery. As the formed consciousness “defines our overall comprehension and, briefly, everything that exists, we cannot get behind it” (Max Planck). In the world of modern medicine, this should be achieved through the integral perception of all newly formed parameters in the novel, to date unknown VW of diagnostics and surgery. How can we make the real-time visualization of 3D-VE motions and animated images of the real surgery in our ORs more realistic? Do our colleagues and other physicians believe that this can be done by pre-/intraoperative use of a DICOM viewer with a hardware (HW) sensor device that supports hand and finger motions as input and requires no hand contact or touching (‘touch-free commands’), as demonstrated during one of our routine preoperative diagnostic procedures (Figure 6)?

Figure 6: LM 3D observation of user interaction with the application, preoperatively and intraoperatively. In our activities to date, we observed the need for voice commands that could reveal additional parameters and application functionalities through a dashboard interface, which could either overlay the current view or be shown on a separate computer screen if the environment allows it.

Moreover, beginners in ENT surgery or colleagues who are not familiar with the application of modern technology in the OR might understand this article as a review reflecting the authors’ personal view, stating only arguments in favor of the topic while adding no new information to the literature and providing no novel clinical insights that would alter patient treatment. On the contrary, we submit an argumentative essay, not a “case report paper” or a “description of standard therapy and knowledge”, focused on the development of our original SW plug-in application for the DICOM viewer platform (which cannot be found on the market). It enables users to use the LM sensor as an interface for camera positioning in 3D-VRen and VE views and integrates speech recognition as a VC solution in an original way. To our knowledge, this approach has not yet been used in rhinosinusology or ENT, nor even in general surgery.

For this purpose, prior to the daily routine use of functional endoscopic sinus surgery (FESS)/computer assisted surgery (CAS)/navigation computer-assisted surgery (NCAS) in diagnosis and surgery (e.g., ENT), and considering our long-standing experience with minimally invasive surgery (MIS) in this segment of human medicine, we very critically examined the benefits of its use, as well as the further experimental-clinical development of this type of navigation-DICOM viewer-LM-VE/VS [5]. We know that using this novel approach in 21st-century medicine would enable all systems of simulated reality to offer the user/tele-user [10,11] (Figure 7) the ability to move and act within apparent worlds instead of the real world, as described in our previous publications [6] and demonstrated in the OR during an operative procedure on a patient with a maxillary sinus cyst protruding into the oral cavity through an oroantral fistula.

Figure 7: Five different monitors in our OR: NESS, operation field, tele-remote site, MSCT/MRI 2D black-and-white unlimited movies (coronal/axial/sagittal projections), and OsiriX-LM monitor with different fields of ROIs with 3D-VE motions and animated images of the real surgery.

In line with our previous experimental studies published over the past two decades [1,10,12] and experiences reported by other authors [13-15], our primary objective was to establish whether this new approach to visualizing human anatomy would avoid the risks associated with real endoscopy and minimize procedural difficulties when used prior to an actual endoscopic examination [16]. Therefore, bearing in mind the definition of VR (“impression of being present in a virtual environment, such as virtual/tele-VE of the patient’s head that does not exist in reality is called VR”), we tested the possibility of deriving spatial cross-sections at selected anatomic cutting planes in rhinosurgery, which would provide additional insight into the internal regions observed [6]. All image operations were contactless and gesture-based, recognized using the LM sensors (AVI/preoperative diagnosis and motion gestures in our OR; http://www.bitmedix.com/), whereas nonimage operations, such as selecting the active target image window or the type of operation, were performed by pressing a combination of three buttons on the foot pedal.

The intraoperative image of the sinus and oro-dental anatomy in this patient showed the new approach to be highly appropriate for surgery, even in such a simple example of pathologic substrate in ENT surgery. Just imagine that during the operation, the rhinosurgeon can simply ‘navigate’ through the oroantral fistula canal (which has been inconceivable to date), perform a 3D assessment of its relation to the roots of other teeth within the maxillary bone, visualize the fistula ostium mucosa in the maxillary sinus and its relation to the rest of the sinus mucosa, ‘enter’ the cyst and observe its extent and the composition of its content, and assess the medically justified degree and range of pathologic cystic tissue removal while preserving the healthy and other vital anatomic elements of the oroantral region of the patient’s head. By performing different, repeatable forms of virtual diagnostics very quickly, followed by VE and finally VS, which can be repeated endlessly, we can ‘copy’ the entire operative procedure as conceived and performed in the VW of the patient’s head anatomy, which will then be ‘copied’ in the same way in the future real operation on the real patient.

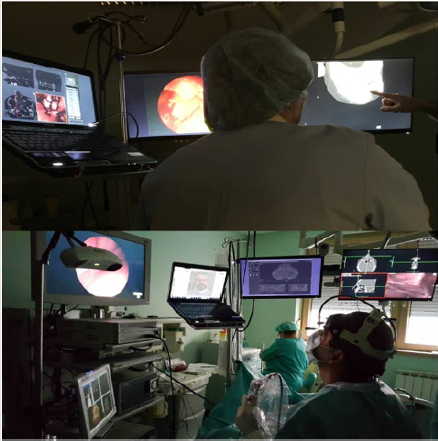

All of this must be possible without interrupting the real operative procedure, with the surgeon keeping his sight on the endoscope inserted into the body of the patient lying on the operating table and using only a few free hand motions, without touching any surface in the OR. Our experience shows that all this can be done easily during the operative procedure, even with some preoperative analysis if necessary (Figures 4 & 7), by using navigation-noninvasive ‘on the fly’ gesture-controlled incisionless surgical interventions (e.g., based on our original Apple-based OsiriX-LM system). Thus, we can state with certainty that the use of this system is highly desirable for enabling contactless ‘in the air’ surgeon’s commands, precise orientation in space, and the possibility of directing a real patient operation by ‘copying’ the previously performed VS (with or without navigation with a 3D digitizer/‘robotic hand’) in the nonexisting surgical world (Figure 8).

Figure 8: 3D view of the nose, paranasal sinuses, as well as oral region, with different views of intraoperative findings from the patient’s maxillary sinus.

Figure 9: VE, VS and the monitor with DICOM viewer-LM, also used for telemedicine purposes. By contactless motions of the surgeon’s hand, we can ‘understand’ the position of pathologic tissue, perform its repeated virtual ‘operation/removal’ from the anatomic field where it is actually found, and reconsider its relationship and marginal points with respect to other vital anatomic structures of the patient’s head. All this is possible by simple contactless motions of the surgeon’s hand, as demonstrated in our OR.

According to our long-standing use of this and similar approaches in clinical practice (diagnostics and surgery/TS) [12,17,18] and experiences reported by other authors [19-22], we have realized that it is quite simple to create the animated image of the course of surgery/telesurgery in the form of navigation, i.e., a fly-through of the real patient’s operative field, as has been done from the very beginning (since 1998) in our computer-assisted TSs [6] (Figure 9). However, do physicians really believe that improving the accuracy of 3D models generated from 2D medical images is of the greatest importance for the sustainable development of AR and VR in the OR? Additionally, one could conclude from “the standard surgical experience” that, in terms of future training and surgical practice, information technology developments in the OR might include VS within the same graphical user interface, using the personal computer mouse as the scalpel or surgical knife, as commented in some papers [23] (without any discussion of sterility in the OR?).

Of course, the previously mentioned possible criticisms of the strategic planning process of our newest ENT contactless surgery have already been discussed [5,11,24]. As we have demonstrated, navigation-OsiriX-LM suggests that real and virtual objects definitely need to be integrated by real ‘in the air’ control with simulation of virtual activities, which requires real-time visualization of 3D-VE motions [5,11] that follows the actions of the surgeon, who may be moving in the VR area [2]. The rules of behavior in this imaginary world are very precisely and simply defined [25] per viam the region of interest on 3D volume-rendered MRI/MSCT slices (static and interactive dynamic 3D models). Additionally, we conclude as follows:

a. Impeccable knowledge of the head anatomy (or other parts of the human body if another type of surgery is in place) [2] can be achieved in the OR much better than just per viam ‘voice navigation’ or by use of various information technology systems in surgery (keyboard, mouse, etc.), although different experiences have also been reported (Rolf Wipfli et al., PLoS One, 2016);

b. We have a very clear ‘natural’ understanding of the pathology in the nonexisting VW (using rotation and changing of the observation point, separation and segmentation of various structures, and visualization of various structures in different colors) [26,27];

c. The real and the virtual operation fields are ‘fused’ in real time [5,28] with an acceptable degree of structure transparency, exclusion of particular structure visualization from the model, and model magnification and diminution [26,29]; and

d. Navigation-OsiriX-LM is a very helpful assistant but not a replacement system, especially for inexperienced surgeons (Figure 10).

Figure 10: Preoperative diagnostics/tele-diagnostics in surgery planning, with navigation through ‘the route of the endoscope’ in our patient with a cyst protruding from the maxillary sinus through the oroantral fistula into the oral cavity. In this case, a real-time system updated the 3D graphic visualization according to the user’s movements (sufficient visualization is always shown on the screen).

However, upon critical scientific consideration of our assessments, we must also pose the following questions:

1. Does the ‘predictability’ of the future operation (no matter how surgically simple or complex) as presented above, previously unknown in surgery, enable precise translation, i.e., ‘mapping’, of the strictly determined anatomic site in the real patient as previously detected on the virtual 3D model?

2. Does this innovative noninvasive surgery of the future, previously realized in the virtual nonexisting space, really enable the future real operation to be performed within a spatial ‘error’ of less than 0.5 mm (e.g., as in the simplest analysis of the accuracy of the LM controller) [27]?

3. What prerequisites, realizable in the future, must be met by our ‘on the fly’ gesture-controlled and incisionless VS interventions for their eventual use to meet the most demanding requirements in the OR?

4. What should be developed in this newly formed surgical philosophy ante finem for this approach to be easy to use in daily surgical routine, better and, in line with human nature, superior to the currently widely accepted standard navigation surgery (e.g., navigation endoscopic sinus surgery (NESS) in ENT), while being less expensive than robotic surgery?

5. Our intention is to offer an alternative to closed SW systems for visual tracking, and we want to start an initiative to develop the SW framework that will interface with depth cameras and provide a set of standardized methods for medical applications such as hand gestures and tracking, face recognition, navigation, etc. This SW should be:

a. Open source, operating-system agnostic, approved for medical use, independent of HW, and

b. In the future, part of decentralized, self-organized medical swarm intelligence (SI) systems in a variety of VR fields in clinical medicine and fundamental research (motion gestures in OR and preoperative diagnosis demo) [30].

6. In our future plans, we will discuss additional different aspects that are currently of great interest regarding the future concept of MIS in rhinology and contactless surgery, as suggested by some of our colleagues, such as:

a. Computational fluid dynamics [30] (“the set of digital imaging and communications in medicine images can be analyzed, e.g., pressure and flow rates in each nostril, so the final result of the surgeon is evaluated not only based on the patient’s feeling but also on airflow characteristics”); and

b. Worldwide accepted training of future surgeons per viam revolutionary techniques/advances in VR.

The ideas for further development of the previously described system for “gesture-controlled incisionless surgical interventions” are presented in our next scientific paper, entitled “Does our current technological advancement represent the future in innovative contactless noninvasive sinus surgery in rhinology? What is next?”

Conclusion

Considering the multitude of long-standing activities of our medical institution/team, the implementation of novel treatment modalities (some of them invented and introduced by our team), the high level of treatment success and patient satisfaction, and above all very efficient performance, we feel free to propose the following suggestions:

a. Novel treatment modalities and technologies should be introduced and incorporated in medical care (navigation 3D surgery/tele-3D surgery, modification of the standard surgical procedures per viam on the fly gesture-controlled incisionless surgical interventions, robotics),

b. The use of new procedures must be ethical,

c. A cost-effectiveness SWOT (strengths, weaknesses, opportunities, threats) analysis should be performed by any medical institution prior to adopting expensive new technologies,

d. Along with appropriate business relationships, the leading officials of medical institutions should maintain a true humane relationship with their staff members,

e. Novel telemedicine technologies should be employed whenever possible, guided by the basic slogan of 21st-century medicine, “send/exchange data, not patients”,

f. We believe that following these criteria is the main reason for the successful medical and business performance of our medical institution, or of any other.

Highlights

a. Our original, purpose-built software (SW) integrates DICOM imaging with a hardware (HW) sensor controller that accepts hand and finger motions as input

b. Our SW is intended to be open source, operating-system agnostic, approved for medical use, and independent of HW

c. This kind of contactless surgery would be easy to use in the daily surgical routine, more in line with human nature, and superior to the currently widely accepted standard navigation surgery

d. We offer an alternative to closed SW systems for visual tracking, which in the future will become part of self-organized medical SI systems in a variety of VR fields in clinical medicine

Acknowledgment

The authors are grateful to Professor Heinz Stammberger, M.D. (†), Graz, Austria, EU, for his helpful discussion of NESS and contactless surgery (February 2018), and to Dr. Armin Stranjak, Lead Software Architect at Siemens Healthcare, Erlangen, Germany, EU, for his discussion of MSCT and MRI nose/sinus scans.

References

- Klapan I, Šimičić Lj, Rišavi R, Pasarić K, Sruk V, et al. (2002) Real time transfer of live video images in parallel with 3D-modeling of the surgical field in computer-assisted telesurgery. J Telemed Telecare 8(3): 125-130.

- Klapan I, Duspara A, Majhen Z, Benić I, Kostelac M (2019) What is the future of minimally invasive sinus surgery: computer-assisted navigation, 3D-surgical planner, augmented reality in the operating room with 'in the air' surgeon's commands as a “biomechanics” of the new era in personalized contactless hand-gesture non-invasive surgeon-computer interaction? Biomed J Sci Tech Res 19(5): 14678-14685.

- Rosa GM, Elizondo ML (2014) Use of a gesture user interface as a touchless image navigation system in dental surgery: Case series report. Imaging Sci Dent 44(2): 155-160.

- Tan JH, Chao C, Zawaideh M, Roberts AC, Kinney TB (2013) Informatics in Radiology: developing a touchless user interface for intraoperative image control during interventional radiology procedures. Radiographics 33(2): E61-70.

- Klapan I, Duspara A, Majhen Z, Benić I, Kostelac M, et al. (2017) What is the future of minimally invasive surgery in rhinology: marker-based virtual reality simulation with touch free surgeon's commands, 3D-surgical navigation with additional remote visualization in the operating room, or ..? Front Otolaryngol Head Neck Surg 1(1): 1-7.

- Klapan I, Raos P, Galeta T (2013) Virtual Reality and 3D computer assisted surgery in rhinology. Ear Nose Throat 95(7): 23-28.

- Ruppert GC, Reis LO, Amorim PH, De Moraes TF, Da Silva JV (2012) Touchless gesture user interface for interactive image visualization in urological surgery. World J Urol 30(5): 687-691.

- Hötker AM, Pitton MB, Mildenberger P, Düber C (2013) Speech and motion control for interventional radiology: requirements and feasibility. Int J Comput Assist Radiol Surg 8(6): 997-1002.

- Klapan I (2011) Application of advanced Virtual Reality and 3D computer assisted technologies in computer assisted surgery and tele-3D-computer assisted surgery in rhinology. In: Kim JJ (Ed.). Virtual Reality. Vienna: InTech, pp. 303-336.

- Klapan I, Šimičić Lj, Rišavi R, Bešenski N, Pasarić K, et al. (2002) Tele-3D-Computer Assisted Functional Endoscopic Sinus Surgery: new dimension in the surgery of the nose and paranasal sinuses. Otolaryngol Head Neck Surg 127(6): 549-557.

- Klapan I, Vranješ Ž, Prgomet D, Lukinović J (2008) Application of advanced virtual reality and 3D computer assisted technologies in tele-3D-computer assisted surgery in rhinology. Coll Antropol 32(1): 217-219.

- Klapan I, Šimičić Lj, Bešenski N, Bumber Ž, Janjanin S, et al. (2002) Application of 3D-computer assisted techniques to sinonasal pathology. Case report: war wounds of paranasal sinuses with metallic foreign bodies. Am J Otolaryngol 23(1): 27-34.

- Caversaccio M, Gerber K, Wimmer W, Williamson T, Anso J, et al. (2017) Robotic cochlear implantation: surgical procedure and first clinical experience. Acta Otolaryngol 137(4): 447-454.

- Citardi MJ, Batra PS (2007) Intraoperative surgical navigation for endoscopic sinus surgery: rationale and indications. Curr Opin Otolaryngol Head Neck Surg 15(1): 23-27.

- Lövquist E, Shorten G, Aboulafia A (2012) Virtual reality-based medical training and assessment: the multidisciplinary relationship between clinicians, educators and developers. Med Teach 34(1): 59-64.

- Knezović J, Kovač M, Klapan I, Mlinarić H, Vranješ Ž, et al. (2007) Application of novel lossless compression of medical images using prediction and contextual error modeling. Coll Antropol 31(4): 315-319.

- Raos P, Klapan I, Galeta T (2015) Additive manufacturing of medical models-applications in rhinology. Coll Antropol 39(3): 667-673.

- Klapan I, Raos P, Galeta T, Kubat G (2016) Virtual reality in rhinology-a new dimension of clinical experience. Ear Nose Throat 95(7): 23-28.

- Kopelman Y, Lanzafame RJ, Kopelman D (2013) Trends in evolving technologies in the operating room of the future. JSLS 17(2): 171-173.

- Wachs JP, Stern HI, Edan YG, Handler M, Feied J, et al. (2008) A gesture-based tool for sterile browsing of radiology images. J Am Med Inform Assoc 15(3): 321-323.

- Ebert LC, Hatch G, Ampanozi G, Thali MJ, Ross S (2012) You can't touch this: touch-free navigation through radiological images. Surg Innov 19(3): 301-307.

- Caversaccio M, Gerber K, Wimmer W, Williamson T, Anso J, et al. (2017) Robotic cochlear implantation: surgical procedure and first clinical experience. Acta Otolaryngol 137(4): 447-454.

- Frank-Ito D, Kimbell J, Laud P, Garcia G, Rhee J (2014) Predicting post-surgery nasal physiology with computational modeling: current challenges and limitations. Otolaryngol Head Neck Surg 151(5): 751-759.

- Zachow S, Muigg P, Hildebrandt T, Doleisch H, Hege H (2009) Visual exploration of nasal airflow. IEEE Trans Vis Comput Graph 15(6): 1407-1414.

- Jacob MG, Wachs JP, Packer RA (2012) Hand-gesture-based sterile interface for the operating room using contextual cues for the navigation of radiological images. J Am Med Inform Assoc 20(e1): e183-186.

- Klapan I, Šimičić Lj, Rišavi R, Bešenski N, Bumber Ž, et al. (2001) Dynamic 3D computer-assisted reconstruction of metallic retrobulbar foreign body for diagnostic and surgical purposes. Case report: orbital injury with ethmoid bone involvement. Orbit 20(1): 35-49.

- Weichert F, Bachmann D, Rudak B, Fisseler D (2013) Analysis of the accuracy and robustness of the leap motion controller. Sensors (Basel) 13(5): 6380-6393.

- Klapan I, Vranješ Ž, Prgomet D, Lukinović J (2008) Application of advanced virtual reality and 3D computer assisted technologies in tele-3D-computer assisted surgery in rhinology. Coll Antropol 32(1): 217-219.

- Park BJ, Jang T, Choi JW, Kim N (2016) Gesture-Controlled Interface for Contactless Control of Various Computer Programs with a Hooking-Based Keyboard and Mouse-Mapping Technique in the Operating Room. Comput Math Methods Med 3: 1-7.

- Bailie N, Hanna B, Watterson J, Gallagher G (2006) An overview of numerical modelling of nasal airflow. Rhinology 44(1): 53-57.

Research Article